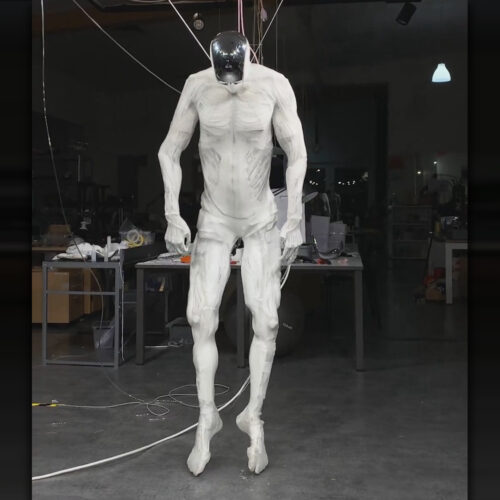

Robot with 1,000 muscles twitches like human while dangling from ceiling

On Wednesday, Clone Robotics released video footage of its Protoclone humanoid robot, a full-body machine that uses synthetic muscles to create unsettlingly human-like movements. In the video, the robot hangs suspended from the ceiling as its limbs twitch and kick, marking what the company claims is a step toward its goal of creating household-helper robots.

Poland-based Clone Robotics designed the Protoclone with a polymer skeleton that replicates 206 human bones. The company built the robot in the hope that it will one day operate human tools and handle household tasks such as doing laundry, washing dishes, and preparing basic meals.

The Protoclone reportedly contains over 1,000 artificial muscles made with the company's "Myofiber" technology, which builds on the McKibben pneumatic muscle concept. Each muscle is a mesh tube wrapped around a balloon; when the balloon fills with hydraulic fluid, the tube contracts, mimicking human muscle function. A 500-watt electric pump serves as the robot's "heart," pushing fluid at 40 standard liters per minute.
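For a rough sense of scale, the two pump figures quoted above put a ceiling on the pressure the system could sustain at full flow, since hydraulic power equals pressure times volumetric flow. The Python sketch below is a back-of-the-envelope estimate, not a Clone Robotics specification. It assumes a lossless pump and treats the quoted "standard liters" as ordinary liters of fluid, and the function name `max_pressure_pa` is purely illustrative.

```python
def max_pressure_pa(power_w: float, flow_l_per_min: float) -> float:
    """Ideal upper bound on pressure in pascals: pressure = power / flow.

    Assumes 100% pump efficiency and that the flow figure is a plain
    volumetric flow of liquid (both are assumptions, not source facts).
    """
    flow_m3_per_s = flow_l_per_min / 1000.0 / 60.0  # L/min -> m^3/s
    return power_w / flow_m3_per_s


# Figures quoted in the article: 500 W pump, 40 liters per minute.
pressure = max_pressure_pa(500.0, 40.0)
print(f"Upper bound: {pressure / 1e5:.1f} bar")  # prints "Upper bound: 7.5 bar"
```

Any real pump loses energy to heat and friction, so the pressure actually available to the muscles at that flow rate would be lower than this bound.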

© Clone Robotics