AMD’s Radeon RX 9060 XT Could Do Budget GPUs Better Than Nvidia

We’ll need to see whether a 9060 XT can beat the RTX 5060 Ti, but what's more important is whether it manages to maintain its price after launch.

On Wednesday, the Trump administration announced plans to rescind and replace a Biden-era rule regulating the export of high-end AI accelerator chips worldwide, Bloomberg and Reuters reported.

A Department of Commerce spokeswoman told Reuters that officials found the previous framework "overly complex, overly bureaucratic, and would stymie American innovation" and pledged to create "a much simpler rule that unleashes American innovation and ensures American AI dominance."

The Biden administration issued the Framework for Artificial Intelligence Diffusion in January during its final week in office. The regulation represented the last salvo of a four-year effort to control global access to so-called "advanced" AI chips (such as GPUs made by Nvidia), with a focus on restricting China's ability to obtain tech that could enhance its military capabilities.


Nvidia CEO Jensen Huang has said it would be a "tremendous loss" for his chip company to lose access to a rapidly growing AI market in China that he estimates will soon be worth $50 billion.

In an interview with CNBC on Tuesday, the Nvidia boss addressed concerns about the growing restrictions facing his company as the US government seeks to clamp down on the sale of its high-performing AI chips to China.

According to Huang, "China is a very large market" that will present a $50 billion addressable market within the next two to three years.

"It would be a tremendous loss not to be able to address it as an American company," Huang said.

"It's going to bring back revenues, it's going to bring back taxes, it's going to create lots of jobs here in the United States," he added.

Nvidia has added trillions of dollars in value since the release of ChatGPT in late 2022, as AI companies in the US, China, and elsewhere have sought its chips, known as GPUs, to train and host ever-smarter AI models.

However, Nvidia's boom in the generative AI era has taken a hit in recent months, with the company's share price down almost 18% year-to-date.

One of the biggest concerns facing Nvidia investors has been the potential long-term impact of President Donald Trump's tariff regime and export controls on advanced technologies to China.

In its most recent earnings report, Nvidia reported $17.1 billion in revenue from China for its last fiscal year, a 66% increase from the $10.3 billion it generated the year before.
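As a quick check, the growth figure follows directly from the two reported annual totals (in billions of dollars); this is simple arithmetic on the numbers above, not an additional disclosure from Nvidia:

```latex
\[
\frac{17.1 - 10.3}{10.3} = \frac{6.8}{10.3} \approx 0.660 \quad\Rightarrow\quad \text{about a } 66\% \text{ increase}
\]
```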

However, last month, the company disclosed a $5.5 billion hit to earnings due to restrictions on sales of its H20 chips to China.

In the interview with CNBC, Huang acknowledged the earnings hit disclosed last month, while stating that his company would "stay agile and keep moving on" and do "whatever's in the best interest of our country."

Nvidia declined to comment.


It's not uncommon for AI companies to fear that Nvidia will swoop in and make their work redundant. But when it happened to Tuhin Srivastava, he was perfectly calm.

"This is the thing about AI — you gotta burn the boats," Srivastava, the cofounder of AI inference platform Baseten, told Business Insider. He hasn't burned his quite yet, but he's bought the kerosene.

The story goes back to when DeepSeek took the AI world by storm at the beginning of this year. Srivastava and his team had been working with the model for weeks, but it was a struggle.

The problem involved a tangle of AI jargon, but essentially, inference (the computing process that happens when AI generates outputs) needed to be scaled up to quickly run these big, complicated reasoning models.

Multiple elements were hitting bottlenecks and slowing down delivery of the model responses, making it a lot less useful for Baseten's customers, who were clamoring for access to the model.

Srivastava's company has access to Nvidia's H200 chips (the best widely available chips that could handle the advanced model at the time), but Nvidia's inference platform was glitching.

A software stack called Triton Inference Server was getting bogged down with all the inference required for DeepSeek's reasoning model R1, Srivastava said. So Baseten built its own, which it still uses now.

Then, in March, Nvidia CEO Jensen Huang took the stage at the company's massive GTC conference and launched a new inference platform: Dynamo.

Dynamo is open-source software that helps Nvidia chips handle the intensive inference used for reasoning models at scale.

"It is essentially the operating system of an AI factory," Huang said onstage.

"This was where the puck was going," Srivastava said. And Nvidia's arrival wasn't a surprise. When the juggernaut inevitably surpasses Baseten's equivalent platform, the small team will abandon what they built and switch, Srivastava said.

He expects it will take a couple of months max.

Nvidia, with its massive team and a research and development budget to match, isn't the only source of churn. Machine learning is constantly evolving. Models get more complex and require more computing power and engineering genius to work at scale, and then they shrink again when those engineers find new efficiencies and the math changes. Researchers and developers are balancing cost, time, accuracy, and hardware inputs, and every change reshuffles the deck.

"You cannot get married to a particular framework or a way of doing things," said Karl Mozurkewich, principal architect at cloud firm Valdi.

"This is my favorite thing about AI," said Theo Browne, a YouTuber and developer whose company, Ping, builds AI software for other developers. "It makes these things that the industry has historically treated as super valuable and holy, and just makes them incredibly cheap and easy to throw away," he told BI.

Browne spent the early years of his career coding for big companies like Twitch. When he saw a reason to start over on a coding project instead of building on top of it, he faced resistance, even when it would save time or money. Sunk cost fallacy reigned.

"I had to learn that rather than waiting for them to say, 'No,' do it so fast they don't have the time to block you," Browne said.

That's the mindset of many bleeding-edge builders in AI.

It's also often what sets startups apart from large enterprises.

Quinn Slack, CEO of AI coding platform Sourcegraph, frequently explains this to his customers when he meets with Fortune 500 companies that may have built their first AI round on shaky foundations.

"I would say 80% of them get there in an hourlong meeting," he said.

Ben Miller, CEO of real estate investment platform Fundrise, is building an AI product for the industry, and he doesn't worry too much about the latest model. If a model works for its purpose, it works, and moving up to the latest innovation is unlikely to be worth the engineers' hours.

"I'm sticking with what works well enough for as long as I can," he said. That's in part because Miller has a large organization, but it's also because he's building things farther up the stack.

That stack consists of hardware at the bottom, usually Nvidia's GPUs, and then layers upon layers of software. Baseten is a few layers up from Nvidia. The AI models, like R1 and GPT-4o, are a few layers up from Baseten. And Miller is just about at the top where consumers are.

"There's no guarantee you're going to grow your customer base or your revenue just because you're releasing the latest bleeding-edge feature," Mozurkewich said. "When you're in front of the end-user, there are diminishing returns to moving fast and breaking things."

Have a tip? Contact this reporter via email at [email protected] or Signal at 443-333-9088. Use a personal email address and a nonwork device; here's our guide to sharing information securely.


Banning the export of Nvidia chips is unlikely to stymie China's development of advanced AI, according to Bernstein analysts.

Nvidia notified investors in a new regulatory filing last week that it expects the Trump administration to require a license for exporting the type of powerful semiconductors used to build AI products to China. Analysts widely interpreted the license requirement as an export ban.

The US chip firm said it would incur $5.5 billion in charges related to inventory, purchase commitments, and reserves for its H20 chip model in the first quarter, which ends on April 27.

Nvidia designed its H20 chip to fit just within the Biden administration's limits on the power of chips that could be sold to Chinese companies, limits aimed at curbing China's AI progress. (A new congressional inquiry takes issue with this reaction to the regulations.)

"Banning the H20 would make no sense as its performance is already well below Chinese alternatives; a ban would simply hand the Chinese AI market completely over to Huawei," Bernstein analysts wrote in a note to investors Wednesday.

Chinese companies have been reducing their reliance on Nvidia chips, according to the analysts. To do so, they have found ways to perform model training on unrestricted edge devices, like personal computers and laptops. They've also moved much of their inference workload (the AI-generated responses and actions) to Nvidia alternatives.

Chinese companies have also engineered ways to network Nvidia chips together with chips designed by their homegrown tech giant, Huawei, or other locally made chips, though software remains a challenge in fully converting from one chip platform to another.

"Our channel checks have shown that most companies are able to carry on without H20 chips," the analysts wrote.

Chinese companies with revenue from foundation model subscriptions — similar to US firms OpenAI or Anthropic — will have the hardest time converting from Nvidia chips to alternatives, since training models is more dependent on Nvidia's proprietary software CUDA.

One Chinese company required 200 engineers and six months to move a model from the Nvidia platform to Huawei chips, and it still only reached 90% of the previous performance, according to Bernstein.
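To put that migration in labor terms, the two reported figures multiply out as follows. This assumes all 200 engineers worked on the port for the full six months, which Bernstein's note as reported here does not specify:

```latex
\[
200 \text{ engineers} \times 6 \text{ months} = 1{,}200 \text{ engineer-months} = 100 \text{ engineer-years}
\]
```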

Huawei presents the most formidable challenge to Nvidia in China.

"In the longer run, expect Huawei to keep closing the gap in performance and Chinese foundational models making up for compute deficiency with DeepSeek-like innovation," the analysts wrote.

Chip supply, though, is likely to be constrained for the foreseeable future, they added, as Huawei, like most major players in the AI chips game, is somewhat dependent on production from Taiwan Semiconductor Manufacturing Company.


Amazon, like many other tech companies, has grappled with significant GPU shortages in recent years.

To address the problem, it created eight "tenets," or guiding principles, for approving employee graphics processing unit requests, according to an internal document seen by Business Insider.

These tenets are part of a broader effort to streamline Amazon's internal GPU distribution process. Last year, Amazon launched "Project Greenland," which one document called a "centralized GPU orchestration platform," to more efficiently allocate capacity across the company. It also pushed for tighter controls by prioritizing return on investment for each AI chip.

As a result, Amazon is no longer facing a GPU crunch, which strained the company last year.

"Amazon has ample GPU capacity to continue innovating for our retail business and other customers across the company," an Amazon spokesperson told BI. "AWS recognized early on that generative AI innovations are fueling rapid adoption of cloud computing services for all our customers, including Amazon, and we quickly evaluated our customers' growing GPU needs and took steps to deliver the capacity they need to drive innovation."

Here are the eight tenets for GPU allocation, according to the internal Amazon document:

- ROI + High Judgment thinking is required for GPU usage prioritization. GPUs are too valuable to be given out on a first-come, first-served basis. Instead, distribution should be determined based on ROI layered with common sense considerations, and provide for the long-term growth of the Company's free cash flow. Distribution can happen in bespoke infrastructure or in hours of a sharing/pooling tool.

- Continuously learn, assess, and improve: We solicit new ideas based on continuous review and are willing to improve our approach as we learn more.

- Avoid silo decisions: Avoid making decisions in isolation; instead, centralize the tracking of GPUs and GPU related initiatives in one place.

- Time is critical: Scalable tooling is a key to moving fast when making distribution decisions which, in turn, allows more time for innovation and learning from our experiences.

- Efficiency feeds innovation: Efficiency paves the way for innovation by encouraging optimal resource utilization, fostering collaboration and resource sharing.

- Embrace risk in the pursuit of innovation: Acceptable level of risk tolerance will allow to embrace the idea of 'failing fast' and maintain an environment conducive to Research and Development.

- Transparency and confidentiality: We encourage transparency around the GPU allocation methodology through education and updates on the wiki's while applying confidentiality around sensitive information on R&D and ROI sharable with only limited stakeholders. We celebrate wins and share lessons learned broadly.

- GPUs previously given to fleets may be recalled if other initiatives show more value. Having a GPU doesn't mean you'll get to keep it.

Do you work at Amazon? Got a tip? Contact this reporter via email at [email protected] or Signal, Telegram, or WhatsApp at 650-942-3061. Use a personal email address and a nonwork device; here's our guide to sharing information securely.


Last year, Amazon's huge retail business had a big problem: It couldn't get enough AI chips to get crucial work done.

With projects getting delayed, the Western world's largest e-commerce operation launched a radical revamp of internal processes and technology to tackle the issue, according to a trove of Amazon documents obtained by Business Insider.

The initiative offers a rare inside look at how a tech giant balances internal demand for these GPU components with supply from Nvidia and other industry sources.

Early in 2024, the generative AI boom was in full swing, with thousands of companies vying for access to the infrastructure needed to apply this powerful new technology.

Inside Amazon, some employees went months without securing GPUs, leading to delays that disrupted timely project launches across the company's retail division, a sector that spans its e-commerce platform and expansive logistics operations, according to the internal documents.

In July, Amazon launched Project Greenland, a "centralized GPU capacity pool" to better manage and allocate its limited GPU supply. The company also tightened approval protocols for internal GPU use, the documents show.

"GPUs are too valuable to be given out on a first-come, first-served basis," one of the Amazon guidelines stated. "Instead, distribution should be determined based on ROI layered with common sense considerations, and provide for the long-term growth of the Company's free cash flow."

Two years into a global shortage, GPUs remain a scarce commodity, even for some of the largest AI companies. OpenAI CEO Sam Altman, for example, said in February that the ChatGPT-maker was "out of GPUs," following a new model launch. Nvidia, the dominant GPU provider, has said it will be supply-constrained this year.

However, Amazon's efforts to tackle this problem may be paying off. By December, internal forecasts suggested the crunch would ease this year, with chip availability expected to improve, the documents showed.

In an email to BI, an Amazon spokesperson said the company's retail arm, which sources GPUs through Amazon Web Services, now has full access to the AI processors.

"Amazon has ample GPU capacity to continue innovating for our retail business and other customers across the company," the spokesperson said. "AWS recognized early on that generative AI innovations are fueling rapid adoption of cloud computing services for all our customers, including Amazon, and we quickly evaluated our customers' growing GPU needs and took steps to deliver the capacity they need to drive innovation."


Amazon now demands hard data and return-on-investment proof for every internal GPU request, according to the documents obtained by BI.

Initiatives are "prioritized and ranked" for GPU allocation based on several factors, including the completeness of data provided and the financial benefit per GPU. Projects must be "shovel-ready," or approved for development, and prove they are in a competitive "race to market." They also have to provide a timeline for when actual benefits will be realized.
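To make those ranking criteria concrete, here is a minimal Python sketch of a gate-then-rank allocator. It is a hypothetical illustration, not Amazon's system: the `GpuRequest` fields, the `score` weighting (benefit per GPU multiplied by a 0-to-1 data-completeness factor), the greedy `allocate` loop, and the team names are all assumptions. Only the criteria themselves (shovel-ready, race to market, a benefits timeline, data completeness, financial benefit per GPU) come from the documents BI reviewed.

```python
from dataclasses import dataclass


@dataclass
class GpuRequest:
    # Hypothetical request schema; the documents list ranking criteria, not fields.
    name: str
    gpus: int                  # GPUs requested
    annual_benefit_usd: float  # estimated financial benefit of the project
    data_completeness: float   # 0.0-1.0, how complete the supporting data is
    shovel_ready: bool         # approved for development
    race_to_market: bool       # competing against a market deadline
    has_timeline: bool         # states when benefits will be realized


def score(r: GpuRequest) -> float:
    # Assumed weighting: benefit per GPU, discounted by incomplete data.
    return (r.annual_benefit_usd / r.gpus) * r.data_completeness


def rank_requests(requests: list[GpuRequest]) -> list[GpuRequest]:
    """Drop requests that fail the gating criteria, then rank the rest by score."""
    eligible = [
        r for r in requests
        if r.gpus > 0 and r.shovel_ready and r.race_to_market and r.has_timeline
    ]
    return sorted(eligible, key=score, reverse=True)


def allocate(requests: list[GpuRequest], supply: int) -> tuple[dict[str, int], int]:
    """Grant whole requests in ranked order, skipping any that no longer fit."""
    grants: dict[str, int] = {}
    for r in rank_requests(requests):
        if r.gpus <= supply:
            grants[r.name] = r.gpus
            supply -= r.gpus
    return grants, supply


requests = [
    GpuRequest("team-a", 64, 3_200_000, 1.0, True, True, True),   # scores 50,000
    GpuRequest("team-b", 32, 2_400_000, 0.5, True, True, True),   # scores 37,500
    GpuRequest("team-c", 16, 5_000_000, 1.0, False, True, True),  # not shovel-ready
]
grants, remaining = allocate(requests, supply=80)
print(grants, remaining)  # {'team-a': 64} 16
```

Because whole requests are granted in ranked order, a request that no longer fits is skipped and the loop keeps going, so a smaller, lower-ranked request could still be served. Splitting a grant across partial supply would be another reasonable design choice.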

One internal document from late 2024 stated that Amazon's retail unit planned to distribute GPUs to the "next highest priority initiatives" as more supply became available in the first quarter of 2025.

The broader priority for Amazon's retail business is to ensure its cloud infrastructure spending generates the "highest return on investment through revenue growth or cost-to-serve reduction," one of the documents added.

Amazon's retail team codified its approach into official "tenets" — internal guidelines that individual teams or projects create for faster decision-making. The tenets emphasize strong ROI, selective approvals, and a push for speed and efficiency.

And if a greenlit project underdelivers, its GPUs can be pulled back.

Here are the 8 tenets for GPU allocation, according to one of the Amazon documents:


To address the complexity of managing GPU supply and demand, Amazon launched a new project called Greenland last year.

Greenland is described as a "centralized GPU orchestration platform to share GPU capacity across teams and maximize utilization," one of the documents said.

It can track GPU usage per initiative, share idle servers, and implement "clawbacks" to reallocate chips to more urgent projects, the documents explained. The system also offers a simplified networking setup and security updates, while alerting employees and leaders to projects with low GPU usage.

This year, Amazon employees are "mandated" to go through Greenland to obtain GPU capacity for "all future demands," and the company expects this to increase efficiency by "reducing idle capacity and optimizing cluster utilization," it added.
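The Greenland mechanics described above (per-initiative tracking, flagging low usage, and clawbacks into a shared pool) can be sketched as a small in-memory pool. This is a hypothetical illustration, not Greenland's actual design: the `GpuPool` class, its methods, the team names, and the 0.3 utilization threshold are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class GpuPool:
    """Hypothetical central pool: tracks GPUs per initiative and reclaims idle ones."""
    free: int
    held: dict[str, int] = field(default_factory=dict)           # initiative -> GPUs held
    utilization: dict[str, float] = field(default_factory=dict)  # initiative -> busy fraction

    def grant(self, initiative: str, gpus: int) -> bool:
        """Hand out GPUs from the free pool if enough are available."""
        if gpus <= 0 or gpus > self.free:
            return False
        self.free -= gpus
        self.held[initiative] = self.held.get(initiative, 0) + gpus
        self.utilization.setdefault(initiative, 0.0)
        return True

    def report(self, initiative: str, busy_fraction: float) -> None:
        """Record the latest measured utilization for an initiative."""
        self.utilization[initiative] = busy_fraction

    def low_usage(self, threshold: float) -> list[str]:
        """Initiatives holding GPUs but using them below the threshold (alert candidates)."""
        return sorted(name for name in self.held if self.utilization.get(name, 0.0) < threshold)

    def claw_back(self, threshold: float) -> int:
        """Return every low-usage initiative's GPUs to the free pool."""
        reclaimed = 0
        for name in self.low_usage(threshold):
            reclaimed += self.held.pop(name)
            self.utilization.pop(name, None)
        self.free += reclaimed
        return reclaimed


pool = GpuPool(free=100)
pool.grant("team-a", 40)
pool.grant("team-b", 40)
pool.report("team-a", 0.9)
pool.report("team-b", 0.1)
print(pool.low_usage(0.3))       # ['team-b']
print(pool.claw_back(0.3))       # 40
print(pool.grant("team-c", 50))  # True
print(pool.free)                 # 10
```

One simplification worth noting: a newly granted initiative starts at 0.0 utilization, so it counts as low usage until it reports a measurement. A real scheduler would presumably allow a grace period before reclaiming anything.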

Amazon's retail business is wasting no time putting its GPUs to work. One document listed more than 160 AI-powered initiatives, including the Rufus shopping assistant and Theia product image generator.

Other AI projects in the works include, per the document:

Last year, Amazon estimated that AI investments by its retail business indirectly contributed $2.5 billion in operating profits, the documents showed. Those investments also resulted in approximately $670 million in variable cost savings.

It's unclear what the 2025 estimates are for those metrics. But Amazon plans to continue spending heavily on AI.

As of early this year, Amazon's retail arm anticipated about $1 billion in investments for GPU-powered AI projects. Overall, the retail division expects to spend around $5.7 billion on AWS cloud infrastructure in 2025, up from $4.5 billion in 2024, the internal documents show.
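For a sense of the year-over-year jump, the two reported cloud-infrastructure figures (in billions of dollars) work out as follows. This is arithmetic on the numbers above; the documents as described do not say how the roughly $1 billion GPU figure fits within the total:

```latex
\[
5.7 - 4.5 = 1.2, \qquad \frac{1.2}{4.5} \approx 0.267 \quad\Rightarrow\quad \text{roughly a } 27\% \text{ increase}
\]
```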

Last year, Amazon's heavy slate of AI projects put pressure on its GPU supply.

Throughout the second half of 2024, Amazon's retail unit suffered a supply shortage of more than 1,000 P5 instances, AWS's cloud server that contains up to 8 Nvidia H100 GPUs, said one of the documents from December. The P5 shortage was expected to slightly improve by early this year, and turn to a surplus later in 2025, according to those December estimates.
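For scale, assuming every instance in the shortfall was a full eight-GPU P5 server, 1,000 instances correspond to:

```latex
\[
1{,}000 \text{ instances} \times 8 \text{ H100 GPUs per instance} = 8{,}000 \text{ H100 GPUs}
\]
```

Because the documents put the shortfall at more than 1,000 instances, the figure under that assumption would be somewhat higher.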

Amazon's spokesperson told BI those estimates are now "outdated," and there's currently no GPU shortage.

AWS's in-house AI chip Trainium was also projected to satisfy the retail division's demand by the end of 2025, but "not sooner," one of the documents said.

The improving capacity aligns with remarks Amazon CEO Andy Jassy made in February, when he said the GPU and server constraints would "relax" by the second half of this year.

But even with these efforts, there are signs that Amazon still worries about GPU supply.

A recent job listing from the Greenland team acknowledged that explosive growth in GPU demand has become this generation's defining challenge: "How do we get more GPU capacity?"


Nvidia announced plans today to manufacture AI chips and build complete supercomputers on US soil for the first time, commissioning over one million square feet of manufacturing space across Arizona and Texas. The politically timed move comes amid rising US-China tensions and the Trump administration's push for domestic manufacturing.

Nvidia's announcement comes less than two weeks after the Trump administration's chaotic rollout of new tariffs and just two days after the administration's contradictory messages on electronic component exemptions.

On Friday night, US Customs and Border Protection posted a bulletin exempting electronics including smartphones, computers, and semiconductors from Trump's steep reciprocal tariffs. But by Sunday, Trump and Commerce Secretary Howard Lutnick contradicted this move, claiming the exemptions were only temporary and that electronics would face new "semiconductor tariffs" in the coming months.


In the high-stakes race to dominate AI infrastructure, a tech giant has subtly shifted gears.

Since ChatGPT burst on the scene in late 2022, there's been a mad dash to build as many AI data centers as possible. Big Tech is spending hundreds of billions of dollars on land, construction, and computing gear to support new generative AI workloads.

Microsoft has been at the forefront of this, mostly through its partnership with OpenAI, the creator of ChatGPT.

For two years, there's been almost zero doubt in the tech industry about this AI expansion. It's been all very UP and to the right.

Until recently, that is.

Last Tuesday, Noelle Walsh, head of Microsoft Cloud Operations, said the company "may strategically pace our plans."

This is pretty shocking news for an AI industry that's been constantly kicking and screaming for more cloud capacity and more Nvidia GPUs. So it's worth reading closely what Walsh wrote about how things have changed:

"In recent years, demand for our cloud and AI services grew more than we could have ever anticipated and to meet this opportunity, we began executing the largest and most ambitious infrastructure scaling project in our history," she wrote in a post on LinkedIn.

"By nature, any significant new endeavor at this size and scale requires agility and refinement as we learn and grow with our customers. What this means is that we are slowing or pausing some early-stage projects," Walsh added.

She didn't share more details, but TD Cowen analyst Michael Elias has found several recent examples of what he said was Microsoft backing off.

In the last six months, the tech giant has walked away from more than 2 gigawatts of AI cloud capacity in the US and Europe that was in the process of being leased, he said. In the past month or so, Microsoft has also deferred and canceled existing data center leases in the US and Europe, Elias wrote in a recent note to investors.

This pullback on new capacity leasing was largely driven by Microsoft's decision to not support incremental OpenAI training workloads, Elias said. A recent change to this crucial partnership allows OpenAI to work with other cloud providers beyond Microsoft.

"However, we continue to believe the lease cancellations and deferrals of capacity point to data center oversupply relative to its current demand forecast," Elias added.

This is worrying because trillions of dollars in current and planned investments are riding on the generative AI boom continuing at a rapid pace. With so much money on the line, any inkling that this rocket ship is not ascending at light speed is unnerving. (I asked a Microsoft spokesperson all about this twice, and didn't get a response.)

The reality is more nuanced than a simple pullback, though. What we're witnessing is a recalibration — not a retreat.

Barclays analyst Raimo Lenschow put the situation in context. The initial wave of this industry spending spree focused a lot on securing land and buildings to house all the chips and other computing gear needed to create and run AI models and services.

As part of this AI "land grab," it's common for large cloud companies to sign and negotiate leases that they end up walking away from later, Lenschow explained.

Now that Microsoft feels more comfortable with the amount of land it has on hand, the company is likely shifting some spending to the later stages that focus more on buying the GPUs and other computing gear that go inside these new data centers.

"In other words, over the past few quarters, Microsoft has 'overspent' on land and buildings, but is now going back to a more normal cadence," Lenschow wrote in a recent note to investors.

Microsoft still plans $80 billion in capital expenditures during its 2025 fiscal year and has guided for year-over-year growth in the next fiscal year. So, the company probably isn't backing away from AI much, but rather becoming more strategic about where and how it invests.

Part of the shift appears to be from AI training to inference. Pre-training is how new models are created, and this requires loads of closely connected GPUs, along with state-of-the-art networking. Expensive stuff! Inference is how existing models are run to support services such as AI agents and Copilots. Inference is less technically demanding but is expected to be the larger market.

With inference outpacing training, the focus is shifting toward scalable, cost-effective infrastructure that maximizes return on investment.

For instance, at a recent AI conference in New York, the discussion focused more on efficiency than on attaining AGI, or artificial general intelligence, a costly endeavor to make machines work better than humans.

AI startup Cohere noted that its new Command A model only needs two GPUs to run. That's a heck of a lot less than most models have required in recent years.

Mustafa Suleyman, CEO of Microsoft AI, echoed this in a recent podcast. While he acknowledged a slight slowdown in returns from massive pre-training runs, he emphasized that the company's compute consumption is still "unbelievable" — it's just shifting to different stages of the AI pipeline.

Suleyman also clarified that some of the canceled leases and projects were never finalized contracts, but rather exploratory discussions — part of standard operating procedure in hyperscale cloud planning.

This strategic pivot comes as OpenAI, Microsoft's close partner, has begun sourcing capacity from other cloud providers, and is even hinting at developing its own data centers. Microsoft, however, maintains a right of first refusal on new OpenAI capacity, signaling continued deep integration between the two companies.

First, don't mistake agility for weakness. Microsoft is likely adjusting to changing market dynamics, not scaling back ambition. Second, the hyperscaler space remains incredibly competitive.

According to Elias, when Microsoft walked away from capacity in overseas markets, Google stepped in to snap up the supply. Meanwhile, Meta backfilled the capacity that Microsoft left on the table in the US.

"Both of these hyperscalers are in the midst of a material year-over-year ramp in data center demand," Elias wrote, referring to Google and Meta.

So Microsoft's pivot may be more a sign of maturity than of retreat. As AI adoption enters its next phase, the winners won't necessarily be those who spend the most, but those who spend the smartest.


A federal judge in Manhattan is about to wave the green starting flag on a fraud trial mixing NASCAR, a former hedge funder, and an Nvidia-backed tech stock that proved to be a come-from-behind winner.

Jury selection is scheduled for Monday in the trial, in which racing superfan and car collector Andrew Franzone, 48, will fight securities and wire fraud charges.

Federal prosecutors say Franzone tricked more than 100 victims — including fellow drivers and racing fans — into investing a total of $40 million in his Miami hedge fund.

NASCAR is central to the case. Prosecutors say Franzone found his victims and spent his money at its speedways and vintage car shows.

"Franzone committed his crime by exploiting the networks and connections in the NASCAR community," from coast to coast and in England, prosecutors wrote last month.

Prosecutors say Franzone's victims include racing legend and 1995 NASCAR truck series champion Mike "The Gunslinger" Skinner. And they say Franzone used the fund to finance a flashy, high-octane racing lifestyle.

In 2015, Franzone illegally spent $565,000 in fund assets to purchase an airplane hangar just outside Daytona Beach, prosecutors say.

The hangar housed Franzone's prized vintage race car collection, including Skinner's series-winning Chevy truck and the Ford Galaxie that Fred "Golden Boy" Lorenzen drove to victory in the 1965 Daytona 500, according to a 2016 profile in the Wall Street Journal.

"It was the coolest sound I'd ever heard," Franzone told the Journal, describing being in his 20s and hearing the engine roar of "an old 1960s big block stock car" for the first time.

Prosecutors say the hangar was also home to Franzone's racing team, ATF & Gunslinger. Skinner was a celebrity driver for the team. So was five-time pro-wrestling world champion Bill Goldberg.

ATF & Gunslinger proved a success, winning races in the US and UK. In 2017, Hard Rock International, the global café and hotel chain, signed on as a sponsor.

Then, in 2019, investors became jittery, questioning Franzone's alleged lies about his fund's liquidity and performance, and — in the words of prosecutors — his "house of cards" collapsed.

Now a criminal defendant — who used the New York City subway to travel to his most recent court date — Franzone has fought, without luck, to keep any reference to NASCAR and race cars out of his trial.

During Franzone's most recent court appearance, on April 7, defense attorney Joseph R. Corozzo argued that references to his client's spending on his racing hobby would be "unduly prejudicial."

It would be like telling jurors, "His spending is on race cars, so you should be offended," Corozzo argued.

In a ruling Thursday, US District Judge Vernon Broderick disagreed. The judge wrote that evidence of Franzone's spending and lifestyle "is probative of his motive to commit the fraud," and therefore fair game for jurors to hear about.

Franzone was so obsessed with maintaining his NASCAR lifestyle that even after his fund went bankrupt in 2019, he pocketed a $200,000 investment from one of his closest racing buddies, prosecutors allege.

He immediately spent $50,000 of the money on "luxury vehicles," prosecutors say.

Another $15,000 was allegedly diverted to "a romantic partner," a Manhattan woman who, according to court filings, was "purportedly engaged in the business of delivering luxury pet gift baskets."

Franzone wired the now-former girlfriend $289,000 in fund assets between 2017 and 2019, prosecutors say.

It wasn't until nearly two years later, in 2021, that he was arrested at a palm-tree-shaded beachfront Westin in Fort Lauderdale, where he'd been living for the previous year. His mother would end up paying his overdue $2,270 hotel bill, using his father's credit card, according to court documents.

Soon after his arrest, the bankruptcy trustee liquidated the hedge fund without Franzone's approval. Franzone had spent two years fighting to keep the fund afloat amid investor mutinies, bankruptcy proceedings, an SEC investigation, and a sea of litigation.

There's a twist in the track of this story, though, involving the NASCAR-worthy comeback of one of Franzone's investments.

Just why Franzone's "FF Fund" filed for bankruptcy in 2019 remains in dispute.

Prosecutors say it was because Franzone realized he couldn't pay back his investors: the vast majority of his fund's assets were risky, failing, or "illiquid" investments that could not quickly be converted into cash. The defense says Franzone had hoped bankruptcy would protect the still-viable fund from a litigious former investor who was trying to dissolve it entirely.

Either way, a little over a year ago, the bankruptcy trustee liquidated, or sold off, one of the fund's investments and hit the jackpot.

Franzone had purchased 250,000 shares of an Nvidia-backed cloud computing company called CoreWeave for $250,000 in 2019.

The shares liquidated by the trustee sold for over $55 million.
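To put the scale of that windfall in perspective, the short calculation below works through the reported figures. It is a rough sketch that treats "over $55 million" as exactly $55 million, so the results are a lower bound, and it ignores fees, taxes, and the timing of the sale.

```python
# Reported figures: 250,000 CoreWeave shares bought for $250,000 in 2019,
# later sold by the bankruptcy trustee for "over $55 million".
shares = 250_000
cost_basis = 250_000.0
sale_proceeds = 55_000_000.0  # treated as exactly $55M, so results are a lower bound

cost_per_share = cost_basis / shares          # dollars paid per share
proceeds_per_share = sale_proceeds / shares   # dollars received per share
multiple = sale_proceeds / cost_basis         # proceeds as a multiple of the original outlay

print(f"Cost per share:     ${cost_per_share:,.2f}")
print(f"Proceeds per share: ${proceeds_per_share:,.2f}")
print(f"Return multiple:    {multiple:,.0f}x")
```

In other words, each share bought for about $1 fetched roughly $220, a return of at least 220 times the original stake.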

"The investors will get more than their investments back," Franzone's Miami bankruptcy attorney told the judge in that case last year.

"The fund seems not to be insolvent. To the contrary, it's done amazing because of the investment that was made in the company CoreWeave."

In Franzone's defense, his lawyers argue that investors were warned in writing that any investment comes with risk. They were also told the fund would use any investment techniques Franzone felt were appropriate.

And in the case of CoreWeave, all of the investors who stuck with the fund — instead of writing their investment off on their taxes as a capital loss — have made money. And that includes Franzone and his own parents and brother, who remain investors, according to court records.

Prosecutors countered in a filing last month that it matters not a whit if CoreWeave did well.

"Whether those investors ultimately recouped their losses years later, and only after FF Fund filed for bankruptcy, does not alter the fact that Franzone lied to them, deceived them, and misused their funds as part of his scheme," prosecutors wrote the judge.

Franzone had hoped to argue that the success of his investment in CoreWeave proves that he never intended to defraud anyone and that investors will ultimately recoup any losses.

On Thursday, the judge sided with prosecutors and barred the defense from making this argument.

An attorney for Franzone and a spokesperson for the US Attorney's Office did not respond to requests for comment left Friday afternoon.

The trial is expected to last three weeks. If convicted, Franzone faces a maximum sentence of 20 years in prison.

CoreWeave listed on the Nasdaq Friday amid a shifting narrative and much anticipation. The company priced its IPO at $40 per share. The stock flailed, opening at $39 per share, then falling as much as 6% and ending the day back up at $41.59.

The cloud firm, founded in 2017, is the first pure-play AI public offering in the US. CoreWeave buys graphics processing units from Nvidia and pairs them with software that gives companies an easy way to access the GPUs and get top performance from their AI products and services.

The company's financial future depends on two unknowns: whether the use and usefulness of AI will grow immensely, and whether those workloads will continue to run on Nvidia GPUs.

It's no wonder that the listing has often been described as a bellwether for the entire AI industry.

But CoreWeave's specific business has some contours that could be responsible for Friday's ambivalent debut without passing judgment on AI as a whole.

CoreWeave's customers are highly concentrated, and its suppliers are even more so. The company is highly leveraged, with billions of dollars in debt collateralized by GPUs, and those GPUs will eventually become obsolete.

Umesh Padval, managing director of Thomvest, expects the price of the GPU computing CoreWeave offers to fall over the next 12 to 18 months as GPU supply continues to improve, which could challenge the company's future profitability.

"In general, it's not a bellwether in my opinion," Padval told Business Insider.

So what does it mean that CoreWeave's debut didn't rise to meet hopes and expectations?

Karl Mozurkewich, principal architect at cloud firm Valdi, told BI the Friday IPO is more of a test for the neocloud concept than for AI. "Neocloud" is a term used to describe young public cloud providers that focus solely on accelerated computing. They often use Nvidia's preferred reference architecture and, in theory, demonstrate the best possible performance for Nvidia's hardware.

Nvidia CEO Jensen Huang gave the bunch a shoutout at the company's tentpole GTC conference last week.

"What they do is just one thing. They host GPUs," Huang said to an audience of nearly 18,000. "They call themselves GPU clouds, and one of our great partners, CoreWeave, is in the process of going public and we're super proud of them."

CoreWeave's public market performance will signal what shape the future could take for these companies, according to Mozurkewich. Will more companies try to replicate the GPU-cloud model? Will Nvidia seed more similar businesses? Will it continue to give neoclouds early access to new hardware?

"I think the industry is very interested to see if the shape of CoreWeave is long-term successful," Mozurkewich said.

Daniel Newman, CEO of the Futurum Group, told BI that CoreWeave is "one measuring point of the AI trade; it isn't entirely indicative of the overall AI market or AI demand." He added the company has the opportunity to improve its fate as AI scales and the customer base grows and diversifies.

Lucas Keh, a semiconductors analyst at Third Bridge, agreed.

"Currently, more than 70% of CoreWeave's revenue comes from hyperscalers, but our experts expect this concentration to decrease 1-2 years after an IPO as the company diversifies its customer base beyond public cloud customers," Keh said via email.

Having a handful of large, dominant enterprise customers is not uncommon for a young provider like CoreWeave, Mozurkewich said. But it's also no surprise that it could concern investors.

"This is where CoreWeave has a chance to shine as AI and the demand for AI spans beyond the big 7 to 10 names. The caveat will be how stable GPU prices are as availability increases and competition increases," Newman said.

Other issues, like obsolescence, the resulting depreciation, and leverage, will be harder to shake.

Have a tip or an insight to share? Contact Emma by email or use the secure messaging app Signal: 443-333-9088

I recently wrote about Nvidia's latest AI chip-and-server package and how this new advancement may dent the value of the previous product.

Nvidia's new offering likely caused Amazon to reduce the useful life of its AI servers, which took a big chunk out of earnings.

Other Big Tech companies, such as Microsoft, Google, and Meta, may have to take similar tough medicine, according to analysts.

This issue might also impact Nvidia-backed CoreWeave, which is doing an IPO. Its shares listed on Friday under the ticker "CRWV." The company is a so-called neocloud, specializing in generative AI workloads that rely mostly on Nvidia GPUs and servers.

Like its bigger rivals, CoreWeave has been buying oodles of Nvidia GPUs and renting them out over the internet. The startup had deployed more than 250,000 GPUs by the end of 2024, per its filing to go public.

These are incredibly valuable assets. Tech companies and startups have been jostling for the right to buy Nvidia GPUs in recent years, so any company that has amassed a quarter of a million of these components has done very well.

There's a problem, though. AI technology is advancing so rapidly that it can make existing gear obsolete, or at least less useful, more quickly. This is happening now as Nvidia rolls out its latest AI chip-and-server package, Blackwell. It's notably better than the previous version, Hopper, which came out in 2022.

Veteran tech analyst Ross Sandler recently shared a chart showing that the cost of renting older Hopper-based GPUs has plummeted as the newer Blackwell GPUs become more available.

The majority of CoreWeave's deployed GPUs are based on the older Hopper architecture, according to its latest IPO filing from March 20.

Sometimes, in situations like this, companies have to adjust their financials to reflect the quickly changing product landscape. This is done by reducing the estimated useful life of the assets in question. Then, through depreciation, the value of the assets is reduced over a shorter period to reflect things like wear and tear and, ultimately, obsolescence. The faster the depreciation, the bigger the hit to earnings.
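A minimal straight-line depreciation sketch shows why a shorter useful life means a bigger annual hit to earnings. The $1.2 billion fleet cost is a hypothetical figure, not taken from any company's filing. The sketch also assumes zero salvage value and applies the new useful life from the start. In practice, a change in a useful-life estimate is typically applied prospectively to the remaining book value, so real-world effects will differ.

```python
def annual_straight_line_depreciation(cost: float, useful_life_years: float,
                                      salvage: float = 0.0) -> float:
    """Spread (cost - salvage) evenly across each year of the useful life."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    return (cost - salvage) / useful_life_years


# Hypothetical server fleet cost, for illustration only.
fleet_cost = 1_200_000_000

six_year = annual_straight_line_depreciation(fleet_cost, 6)
five_year = annual_straight_line_depreciation(fleet_cost, 5)

print(f"6-year life:          ${six_year:,.0f} per year")
print(f"5-year life:          ${five_year:,.0f} per year")
print(f"Extra annual expense: ${five_year - six_year:,.0f}")
```

Under these assumptions, cutting the useful life from six years to five raises the yearly depreciation charge from $200 million to $240 million. That extra $40 million comes straight out of operating income. Lengthening the life works in reverse and lowers annual expenses.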

Amazon, the world's largest cloud provider, just did this. On a recent conference call with analysts, the company "observed an increased pace of technology development, particularly in the area of artificial intelligence and machine learning."

That caused Amazon Web Services to decrease the useful life of some of its servers and networking gear from six years to five years, beginning in January.

Sandler, the Barclays analyst, thinks other tech companies may have to do the same, which could cut operating income by billions of dollars.

Will CoreWeave have to do the same, just as it's trying to pull off one of the biggest tech IPOs in years?

I asked a CoreWeave spokeswoman about this, but she declined to comment. This is not unusual, because companies in the midst of IPOs have to follow strict rules that limit what they can say publicly.

CoreWeave talks about this issue in its latest IPO filing, writing that the company is always upgrading its platform, which includes replacing old equipment.

"This requires us to make certain estimates with respect to the useful life of the components of our infrastructure and to maximize the value of the components of our infrastructure, including our GPUs, to the fullest extent possible."

The company warned those estimates could be inaccurate. CoreWeave said its calculations involve a host of assumptions that could change and infrastructure upgrades that might not go according to plan — all of which could affect the company, now and later.

This caution is normal because companies have to detail everything that could hit their bottom line, from pandemics to cybersecurity attacks.

As recently as January 2023, CoreWeave was taking the opposite approach to this situation, according to its IPO filing. The company increased the estimated useful life of its computing gear from five years to six years. That change reduced expenses by $20 million and boosted earnings by 10 cents a share for the 2023 year.

If the company now follows AWS and reduces the useful life of its gear, that might dent earnings. Again, CoreWeave's spokeswoman declined to comment, citing IPO rules.

An important caveat: Just because one giant cloud provider made an adjustment like this, it doesn't mean others will have to do the same. CoreWeave might design its AI data centers differently, somehow making Nvidia GPU systems last longer or become obsolete less quickly, for instance.

It's also worth noting that other big cloud companies, including Google, Meta, and Microsoft, have increased the estimated useful life of their data center equipment in recent years.

Google and Microsoft's current estimates are six years, like CoreWeave's, while Meta's is 5.5 years.

However, Sandler, the Barclays analyst, thinks some of these big companies will follow AWS and shorten these estimates.

Nvidia CEO Jensen Huang made a joke this week that his biggest customers probably won't find funny.

"I said before that when Blackwell starts shipping in volume, you couldn't give Hoppers away," he said at Nvidia's big AI conference Tuesday.

"There are circumstances where Hopper is fine," he added. "Not many."

He was talking about Nvidia's latest AI chip-and-server package, Blackwell. It's notably better than the previous version, Hopper, which came out in 2022.

Big cloud companies, such as Amazon, Microsoft, and Google, buy a ton of these GPU systems to train and run the giant models powering the generative AI revolution. Meta has also gone on a GPU spending spree in recent years.

These companies should be happy about an even more powerful GPU like Blackwell. It's generally great news for the AI community. But there's a problem, too.

When new technology like this improves at such a rapid pace, the previous versions become obsolete, or at least less useful, much faster.

This makes these assets less valuable, so the big cloud companies may have to adjust. This is done through depreciation, where the value of assets is reduced over time to reflect things like wear and tear and ultimately obsolescence. The faster the depreciation, the bigger the hit to earnings.

Ross Sandler, a top tech analyst at Barclays, warned investors on Friday that the big cloud companies and Meta will probably have to make these adjustments, which could significantly reduce profits.

"Hyperscalers are likely overstating earnings," he wrote.

Google and Meta did not respond to Business Insider's questions about this on Friday. Microsoft declined to comment.

Take the example of Amazon Web Services, the largest cloud provider. In February, it became the first to take the pain.

CFO Brian Olsavsky said on Amazon's earnings call last month that the company "observed an increased pace of technology development, particularly in the area of artificial intelligence and machine learning."

"As a result, we're decreasing the useful life for a subset of our servers and networking equipment from 6 years to 5 years, beginning in January 2025," Olsavsky said, adding that this will cut operating income this year by about $700 million.

Then, more bad news: Amazon "early-retired" some of its servers and network equipment, Olsavsky said, adding that this "accelerated depreciation" cost about $920 million and that the company expects it will decrease operating income in 2025 by about $600 million.

Sandler, the Barclays analyst, included a striking chart in his research note on Friday. It tracked the cost of renting H100 GPUs, which use Nvidia's older Hopper architecture, and showed that the price has plummeted as the company's new, better Blackwell GPUs became more available.

"This could be a much larger problem at Meta and Google and other high-margin software companies," Sandler wrote.

For Meta, he estimated that a one-year reduction in the useful life of the company's servers would increase depreciation in 2026 by more than $5 billion and chop operating income by a similar amount.

For Google, a similar change would knock operating profit by $3.5 billion, Sandler estimated.

An important caveat: Just because one giant cloud provider has already made an adjustment like this, it doesn't mean the others will have to do exactly the same thing. Some companies might design their AI data centers differently, somehow making Nvidia GPU systems last longer or become obsolete less quickly.

When the generative AI boom was picking up steam in the summer of 2023, Bernstein analysts were already worrying about this depreciation.

"All those Nvidia GPUs have to be going somewhere. And just how quickly do these newer servers depreciate? We've heard some worrying timetables," they wrote in a note to investors at the time.

One Bernstein analyst, Mark Shmulik, discussed this with my colleague Eugene Kim.

"I'd imagine the tech companies are paying close attention to GPU useful life, but I wouldn't expect anyone to change their depreciation timetables just yet," he wrote in an email to BI at the time.

Now, that time has come.

During Tuesday's Nvidia GTC keynote, CEO Jensen Huang unveiled two so-called "personal AI supercomputers," DGX Spark and DGX Station, both powered by the Grace Blackwell platform. In a way, they represent a new type of AI PC architecture built specifically for running neural networks, and five major PC manufacturers will build them.

These desktop systems, first previewed as "Project DIGITS" in January, aim to bring AI capabilities to developers, researchers, and data scientists who need to prototype, fine-tune, and run large AI models locally. DGX systems can serve as standalone desktop AI labs or "bridge systems" that allow AI developers to move their models from desktops to DGX Cloud or any AI cloud infrastructure with few code changes.

Huang explained the rationale behind these new products in a news release, saying, "AI has transformed every layer of the computing stack. It stands to reason a new class of computers would emerge—designed for AI-native developers and to run AI-native applications."

On Tuesday at Nvidia's GTC 2025 conference in San Jose, California, CEO Jensen Huang revealed several new AI-accelerating GPUs the company plans to release over the coming months and years. He also revealed more specifications about previously announced chips.

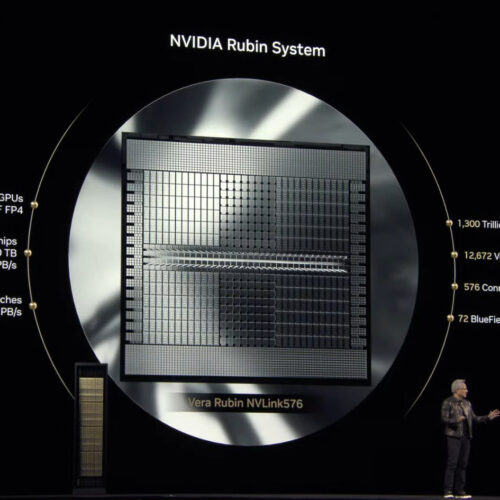

The centerpiece announcement was Vera Rubin, first teased at Computex 2024 and now scheduled for release in the second half of 2026. This GPU, named after a famous astronomer, will feature 288 gigabytes of memory and comes with a custom Nvidia-designed CPU called Vera.

According to Nvidia, Vera Rubin will deliver significant performance improvements over its predecessor, Grace Blackwell, particularly for AI training and inference.

© 2024 TechCrunch. All rights reserved. For personal use only.