Researchers figure out how to get fresh lithium into batteries

As the owner of a 3-year-old laptop, I feel the finite lifespan of lithium batteries acutely. It's still a great machine, but the cost of a battery replacement would take me a significant way down the path of upgrading to a newer, even greater machine. If only there were some way to just plug it in overnight and come back to a rejuvenated battery.

While that sounds like science fiction, a team of Chinese researchers has identified a chemical that can deliver fresh lithium to well-used batteries, extending their life. Unfortunately, getting it to work requires that the battery be constructed with this refresh in mind. Plus, it hasn't been tested with the sort of lithium chemistry that is commonly used in consumer electronics.

Finding the right chemistry

The degradation of battery performance is largely a matter of its key components gradually dropping out of use within the battery. Over repeated charge/discharge cycles, bits of the electrodes fragment and lose contact with the conductors that collect current, while lithium can end up in electrically isolated complexes. There's no obvious way to re-mobilize these lost materials, so the battery's capacity drops. Eventually, the only way to get more capacity is to recycle the internals into a completely new battery.

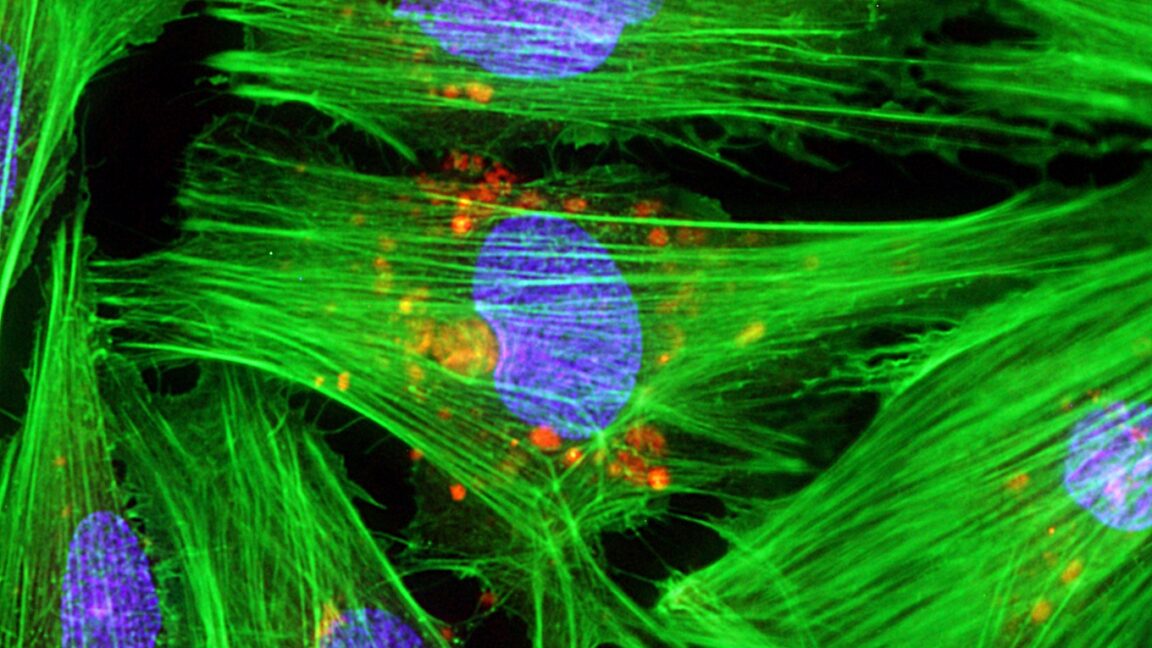

© Kinga Krzeminska