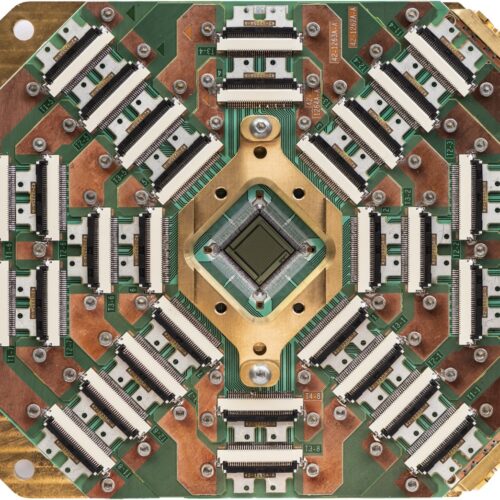

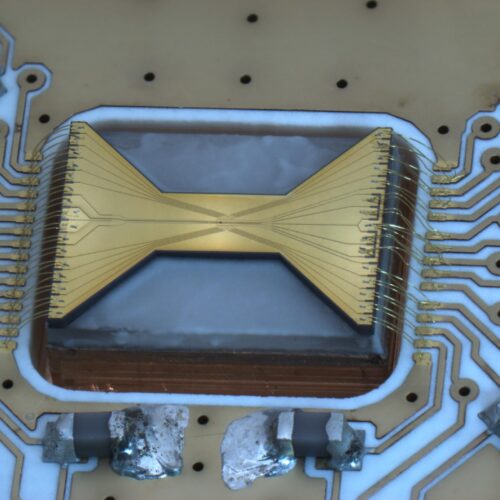

Startup puts a logical qubit in a single piece of hardware

Everyone in quantum computing agrees that error correction will be the key to doing a broad range of useful calculations. But nearly every company in the field seems to have a different vision of how best to get there. Almost all of their plans share a key feature: some variation on logical qubits built by linking together multiple hardware qubits.

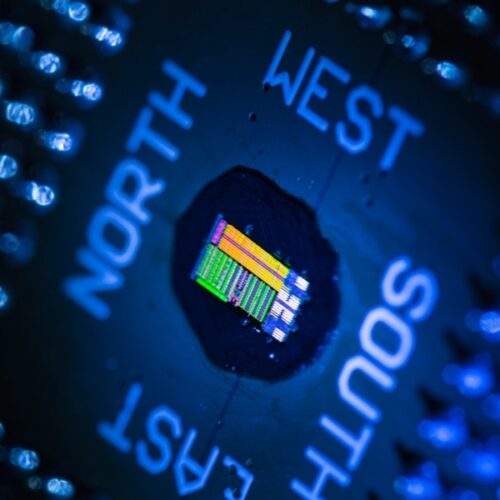

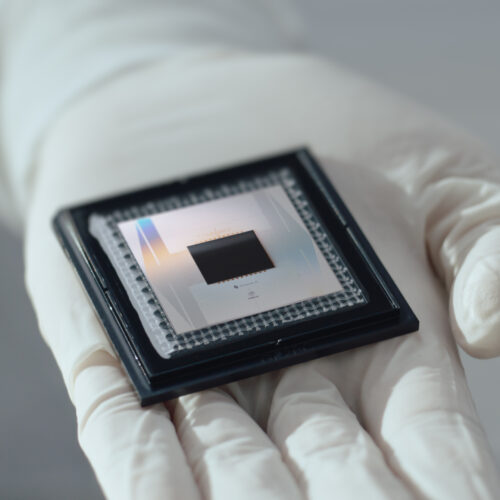

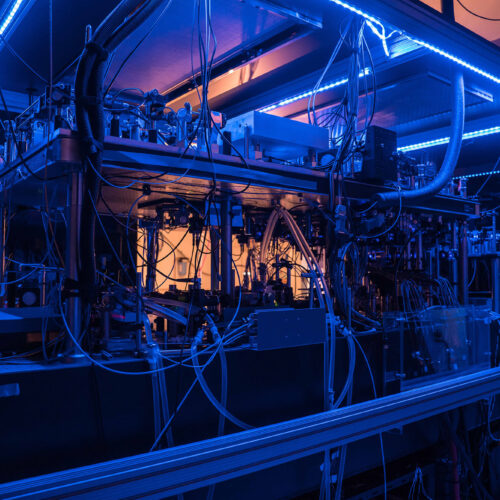

A key exception is Nord Quantique, which aims to dramatically cut the amount of hardware needed to support an error-corrected quantum computer. It does this by packing enough quantum states into a single piece of hardware that each piece can hold an error-corrected qubit. Last week, the company shared results showing that it could build hardware that used photons at two different frequencies to successfully identify every case where a logical qubit lost its state.

That still doesn't provide error correction, and the company didn't use the logical qubit to perform any operations. But it's an important validation of the company's approach.

© Nord Quantique