
Getting the hang of through-hole soldering is tricky for those of us tinkering at home with our irons, spools, flux, and, sometimes, braids. It's almost reassuring, then, to learn that through-hole soldering was also a pain for a firm that has made more than 60 million products with it.

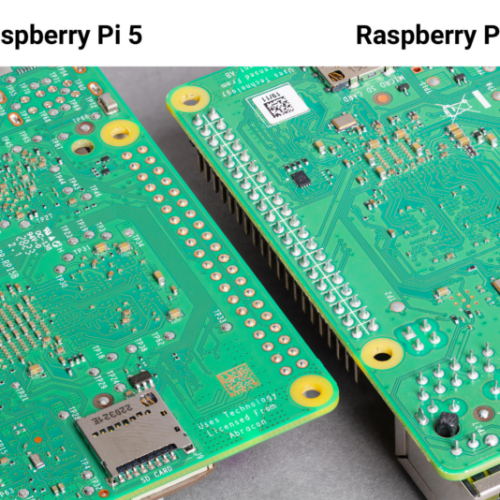

Raspberry Pi boards have a combination of surface-mount devices (SMDs) and through-hole parts. SMDs let far more small chips, resistors, and other components fit on a board, attached by tiny pins, flat contacts, solder balls, or other connections. Parts that are bigger, or that are subject to rough forces like clumsy human hands, still require through-hole soldering: their leads are poked through holes in the board, and solder is applied to join them securely.

The Raspberry Pi board has a 40-pin GPIO header that needs through-hole soldering, along with parts like the Ethernet and USB ports. These require robust solder joints that can't be made the same way as with SMT (surface-mount technology) tools. "In the early days of Raspberry Pi, these parts were inserted by hand, and later by robotic placement," writes Roger Thornton, director of applications for Raspberry Pi, in a blog post. The boards then had to go through a follow-up wave soldering step.


Silicon Valley needs bigger and better supercomputers to get bigger and better AI. We just got an idea of what they might look like by the end of the decade.

A new study published this week by researchers at the San Francisco-based institute Epoch AI said supercomputers — massive systems stacked with chips to train and run AI models — could need the equivalent of nine nuclear reactors by 2030 to keep them chugging along.

Epoch AI estimates that if supercomputer power requirements continue to roughly double each year, as they have since 2019, the leading machine would need about 9GW of power by 2030.

That's roughly enough to power 7 to 9 million homes. Today's most powerful supercomputer needs around 300MW of power, which is "equivalent to about 250,000 households."
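As a rough back-of-envelope check, the sketch below compounds a 2x annual growth rate forward from today's ~300MW and converts the result into households using the per-home draw implied by the "300MW ≈ 250,000 households" comparison (about 1.2kW per home). It assumes 2025 as the base year and five years of doubling to 2030; Epoch AI's own model may use different baseline values.

```python
# Back-of-envelope check of the power projection (assumptions, not Epoch AI's model):
# - base year 2025, with ~300 MW for the leading AI supercomputer
# - power requirements double every year through 2030

BASE_YEAR = 2025
BASE_POWER_MW = 300.0
ANNUAL_GROWTH = 2.0  # "roughly double each year"
HOUSEHOLDS_AT_BASE = 250_000  # 300 MW ~ 250,000 households, per the article


def project_power_mw(year: int) -> float:
    """Compound the base power forward to the given year."""
    return BASE_POWER_MW * ANNUAL_GROWTH ** (year - BASE_YEAR)


def households_powered(power_mw: float) -> float:
    """Convert MW to households using the article's implied per-home draw."""
    kw_per_household = BASE_POWER_MW * 1000 / HOUSEHOLDS_AT_BASE  # 1.2 kW
    return power_mw * 1000 / kw_per_household


if __name__ == "__main__":
    p2030 = project_power_mw(2030)
    print(f"2030 projection: {p2030 / 1000:.1f} GW")  # 9.6 GW
    print(f"Households: {households_powered(p2030) / 1e6:.1f} million")  # 8.0 million
```

Five doublings of 300MW land at about 9.6GW, in line with the "about 9GW" estimate, and the implied 8 million homes falls inside the 7 to 9 million range.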

This makes the potential power needs of future supercomputers look extraordinary. There are a few reasons the next generation of computing seems so demanding.

One explanation is that they will simply be bigger. According to Epoch AI's paper, the leading AI supercomputer in 2030 could require 2 million AI chips and cost $200 billion to build — again, assuming that current growth trends continue.

For context, today's largest supercomputer — the Colossus system, built to full scale within 214 days by Elon Musk's xAI — is estimated to have cost $7 billion to make, and, per the company's website, is stacked with 200,000 chips.

As they grew in performance, AI supercomputers got exponentially more expensive. The upfront hardware cost of leading AI supercomputers doubled roughly every year (1.9x/year). We estimate the hardware for xAI's Colossus cost about $7 billion. pic.twitter.com/6AFCxjeZFJ

— Epoch AI (@EpochAIResearch) April 23, 2025
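The same compounding logic applies to cost and chip counts. The sketch below treats the Colossus figures ($7 billion, 200,000 chips) as a 2025 baseline, which is an assumption, and applies the 1.9x/year hardware-cost growth from Epoch AI's post. It also backs out the annual growth factor implied by going from 200,000 to 2 million chips in five years.

```python
# Rough consistency check of the 2030 cost and chip figures.
# Assumption: Colossus (~$7B, ~200,000 chips) is the 2025 baseline.

BASE_COST_BILLION = 7.0
COST_GROWTH = 1.9  # hardware cost growth per year, per Epoch AI's post
BASE_CHIPS = 200_000
TARGET_CHIPS = 2_000_000
YEARS = 2030 - 2025


def projected_cost_billion(years: int) -> float:
    """Compound the baseline hardware cost forward by `years`."""
    return BASE_COST_BILLION * COST_GROWTH ** years


def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Annual growth factor needed to go from `start` to `end` in `years`."""
    return (end / start) ** (1 / years)


if __name__ == "__main__":
    print(f"2030 hardware cost: ~${projected_cost_billion(YEARS):.0f}B")  # ~$173B
    growth = implied_annual_growth(BASE_CHIPS, TARGET_CHIPS, YEARS)
    print(f"Implied chip growth: {growth:.2f}x/year")  # 1.58x/year
```

Roughly $173 billion is the same order of magnitude as the $200 billion projection; the exact figure depends on the baseline Epoch AI actually used.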

Companies have been looking to secure more chips to provide the computing power needed for increasingly powerful models as they race toward developing AI that surpasses human intelligence.

OpenAI, for instance, started the year with a huge supercomputer announcement: Stargate, a project that plans to invest $500 billion over four years in critical AI infrastructure, including a "computing system."

Epoch AI attributes this growth to a change in how supercomputers are used: once just research tools, they now serve as "industrial machines delivering economic value."

Having AI and supercomputers deliver economic value isn't just a priority for CEOs trying to justify exorbitant capital expenditures, either.

Earlier this month, President Donald Trump took to Truth Social to celebrate a $500 billion investment from Nvidia to build AI supercomputers in the US. It's "big and exciting news," he said, branding the announcement a commitment to "the Golden Age of America."

However, Epoch AI's research, which is based on a dataset covering "about 10% of all relevant AI chips produced in 2023 and 2024 and about 15% of the chip stocks of the largest companies at the start of 2025," suggests that all of this would come with greater power demands.

Epoch AI did note that "AI supercomputers are improving in energy efficiency, but the shift is not quickly enough to offset overall power growth." That power growth also helps explain why companies like Microsoft, Google, and others have been turning to nuclear power to meet their energy needs.

If the AI boom continues, expect supercomputers to keep growing with it.