AI video just took a startling leap in realism. Are we doomed?

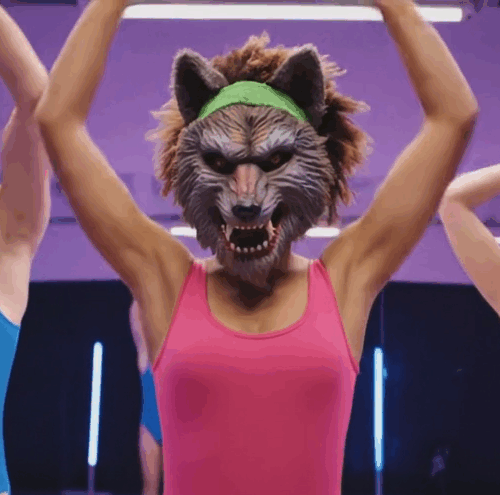

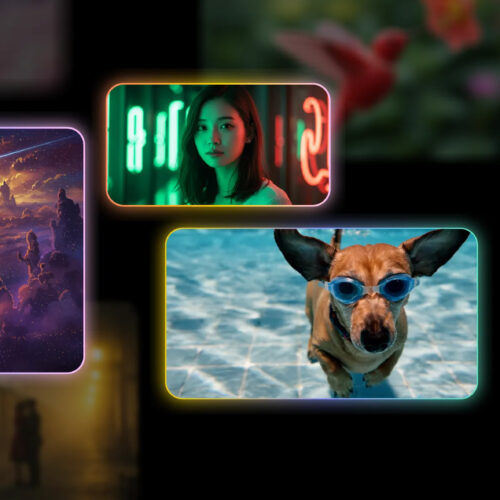

Last week, Google introduced Veo 3, its newest video generation model, which can create 8-second clips with synchronized sound effects and audio dialog, a first for the company's AI tools. The model generates videos at 720p resolution from text descriptions called "prompts" or from still image inputs. It may be the most capable consumer video generator to date, bringing video synthesis to a point where it is becoming very difficult to distinguish between "authentic" and AI-generated media.

Google also launched Flow, an online AI filmmaking tool that combines Veo 3 with the company's Imagen 4 image generator and Gemini language model, allowing creators to describe scenes in natural language and manage characters, locations, and visual styles in a web interface.

Both tools are now available to US subscribers of Google AI Ultra, a plan that costs $250 a month and comes with 12,500 credits. Veo 3 videos cost 150 credits per generation, allowing 83 videos on that plan before you run out. Extra credits are available for 1 cent per credit in blocks of $25, $50, or $200. At 150 credits each, that comes out to $1.50 per video generation. But is the price worth it? We ran some tests with various prompts to see what this technology is truly capable of.
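For readers who want to check the credit math, here is a minimal sketch of the arithmetic in Python. The numbers are the ones quoted above; the constant and function names are our own illustrative choices, not anything from Google's tools, and the sketch assumes a top-up block buys credits at exactly 1 cent each.

```python
# Figures quoted in the article; all names here are illustrative only.
PLAN_CREDITS = 12_500          # credits included with Google AI Ultra
CREDITS_PER_VIDEO = 150        # cost of one Veo 3 generation
CENTS_PER_EXTRA_CREDIT = 1     # price of each top-up credit


def videos_from_credits(credits: int) -> int:
    """Whole videos that a given credit balance can pay for."""
    return credits // CREDITS_PER_VIDEO


def extra_video_cost_usd() -> float:
    """Dollar cost of one generation paid for with top-up credits."""
    return CREDITS_PER_VIDEO * CENTS_PER_EXTRA_CREDIT / 100


def videos_from_block(block_usd: int) -> int:
    """Whole videos from one top-up block (assumes 1 cent per credit)."""
    credits = block_usd * 100 // CENTS_PER_EXTRA_CREDIT
    return videos_from_credits(credits)


if __name__ == "__main__":
    print("Videos included in plan:", videos_from_credits(PLAN_CREDITS))
    print("Cost per extra video (USD):", extra_video_cost_usd())
    for block in (25, 50, 200):
        print(f"${block} block:", videos_from_block(block), "videos")
```

Integer division is used because a partial generation cannot be purchased, which is why the plan's 12,500 credits yield 83 videos with a few credits left over.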