'Save Our Signs' Wants to Save the Real History of National Parks Before Trump Erases It

Data preservationists and archivists have been working tirelessly since the election of President Donald Trump to save websites, data, and public information that’s being removed by the administration for promoting or even mentioning diversity. The administration is now targeting National Parks signs that educate visitors about anything other than “beauty” and “grandeur,” and demanding they remove signs that mention “negative” aspects of American history.

In March, Trump issued an executive order, titled “Restoring Truth and Sanity To American History,” demanding public officials ensure that public monuments and markers under the Department of the Interior’s jurisdiction never address anything negative about American history, past or present. Instead, Trump wrote, they should only ever acknowledge how pretty the landscape looks.

Last month, National Park Service directors across the country were informed that they must post surveys at informational sites that encourage visitors to report “any signs or other information that are negative about either past or living Americans or that fail to emphasize the beauty, grandeur, and abundance of landscapes and other natural features,” as dictated in a May follow-up order from Interior Secretary Doug Burgum. QR codes started popping up on placards in national parks, taking visitors to a survey that asks them to snitch on exactly that kind of content.

The orders demand that this “negative” content be removed by September 17.

Following these orders, volunteer preservationists from Safeguarding Research & Culture and the Data Rescue Project launched Save Our Signs, a project that asks parks visitors to upload photos of placards, signage and monuments on public lands—including at national parks, historic sites, monuments, memorials, battlefields, trails, and lakeshores—to help preserve them if they’re removed from public view.

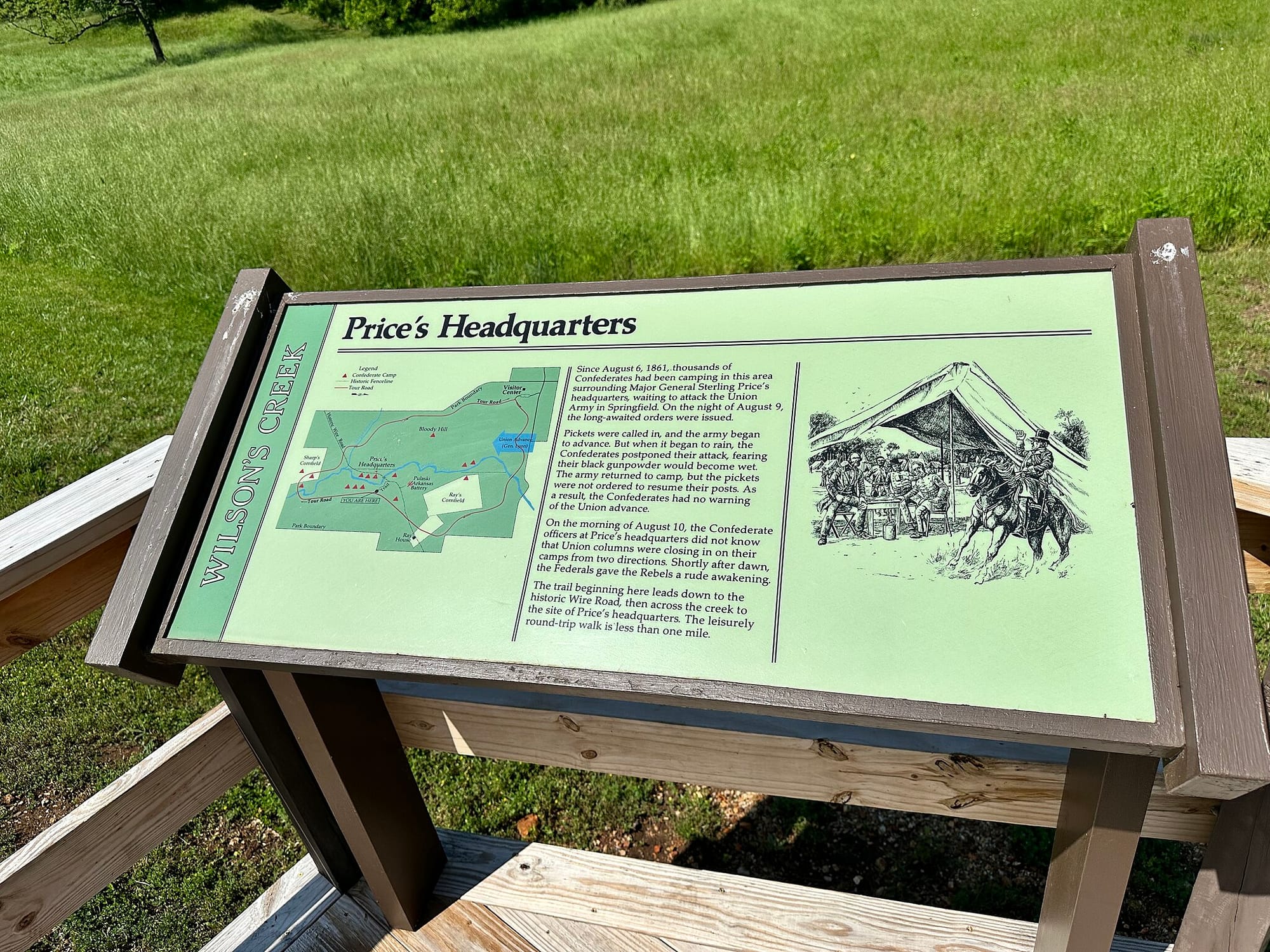

Trump and Burgum’s orders don’t give specific examples of content they’d deem negative. But the Colorado Sun reported that signs with the survey QR code appeared at the Amache National Historic Site in June; Amache was one of ten incarceration sites for Japanese Americans during World War II. NPR reported that a sign also appeared at the Civil War battlefield at Wilson's Creek in Missouri. Numerous sites across the country serve to educate visitors about histories that reflect fights for civil rights, recognize atrocities carried out by the U.S. government on Black and Indigenous people, or acknowledge contributions made by minority groups.

Lynda Kellam, a founding member of the Data Rescue Project and a data librarian at a university, told 404 Media that the group started discussing the project in mid-June, and partnered with another group at the University of Minnesota that was already working on a similar project. Jenny McBurney, the Government Publications Librarian at the University of Minnesota-Twin Cities, told 404 Media that conversations among her university network arose from the wider effort to preserve information being modified and removed on government websites.

In April, NIH websites and repositories, including archives of cancer imagery, Alzheimer’s disease research, sleep studies, HIV databases, and COVID-19 vaccination and mortality data, were marked for removal, and archivists scrambled to save them. In February, NASA website administrators were told to scrub their sites of anything that could be considered “DEI,” including mentions of Indigenous people, environmental justice, and women in leadership. And in January, GitHub activity showed federal workers deleting and editing documents, employee handbooks, Slack bots, and job listings in an attempt to comply with Trump’s policies against diversity, equity, and inclusion.

“To me, this order to remove sign content that ‘disparages’ Americans is an extension of this loss of information that people rely on,” McBurney said. “The current administration is trying to scrub websites, datasets, and now signs in National Parks (and on other public lands) of words or ideas that they don't like. We're trying to preserve this content to help preserve our full history, not just a tidy whitewashed version.”

Save Our Signs launched the current iteration on July 3 and plans to make the collected photos public in October.

“In addition to being a data librarian, I am a trained historian specializing in American history. My research primarily revolves around anti-imperial and anti-lynching movements in the U.S.,” Kellam said. “Through this work, I've observed that the contributions of marginalized groups are frequently overlooked, yet they are pivotal to the nation's development. We need to have a comprehensive understanding of our history, acknowledging both its positive and problematic aspects. The removal of these signs would result in an incomplete and biased portrayal of our past.”

“It is essential that we make this content public and preserve all of this public interpretative material for the future,” McBurney said. “It was all funded with taxpayer money and it belongs to all of us. We’re worried that a lot of stuff could end up in the trash can and we want to make sure that we save a copy. Some of these signs might be outdated or wrong. But we don’t think the intent was to improve the accuracy of NPS interpretation. There has been SO much work over the last 30 years to widen the lens and nuance the interpretations that are shared in the national parks. This effort threatens all that by introducing a process to cull signs and messaging in a way that is not transparent.”

Trump has focused on gutting the Park Service since day one of his presidency: Since his administration took office, the National Park Service has lost almost a quarter of its permanent staff, according to the National Parks Conservation Association. On Thursday, Trump issued a new executive order that will raise entry fees to national parks for foreign tourists.

The “Restoring Truth and Sanity To American History” order states that Burgum must “ensure that all public monuments, memorials, statues, markers, or similar properties within the Department of the Interior’s jurisdiction do not contain descriptions, depictions, or other content that inappropriately disparage Americans past or living (including persons living in colonial times), and instead focus on the greatness of the achievements and progress of the American people or, with respect to natural features, the beauty, abundance, and grandeur of the American landscape.” Kyle Patterson, public affairs officer at Rocky Mountain National Park, told the Denver Post that these signs have been posted “in a variety of public-facing locations including visitor centers, toilet facilities, trailheads and other visitor contact points that are easily accessible and don’t impede the flow of traffic.”

Predictably, parks visitors are using the QR code survey to make their opinions heard. “This felonious Administration is the very definition of un-American. The parks belong to us, the people. ... Respectfully, GO **** YOURSELVES,” one comment directed to Interior Secretary Doug Burgum said, in a leaked document provided to SFGATE by the National Parks Conservation Association.

“To maintain a democratic society, it is essential for the electorate to be well-informed, which includes having a thorough awareness of our historical challenges,” Kellam said. “This project combines our expertise as data librarians and preservationists and our concern for telling the full story of our country.”

“The point of history is not just to tell happy stories that make some people feel good. It's to help us understand how and why we got to this point,” McBurney said. “And National Parks sites have been chosen very carefully to help tell that broad and complicated history of our nation. If we just start removing things with no thought and consideration, we risk undermining all of that. We risk losing all kinds of work that has been done over so many years to help people understand the places they are visiting.”