Scientists Discover New World In Our Solar System: ‘Ammonite’

Welcome back to the Abstract! Here are the studies that made me smile, think, and despair for humanity this week.

First up, it’s officially a hot Jurassic summer with the recent release of yet another Mesozoic movie filled with de-extincted animals that are oddly preoccupied with human flesh. We’ll lead with a story about a fantastic Jurassic predator that didn’t make the cut for Jurassic World: Rebirth, but will eternally star in your nightmares hereafter.

Then: a whole new world, the horrific consequences of Medicaid cuts, and the cosmologies of ancient graveyards.

The case of the cursed ichthyosaur

Jaws, a summer blockbuster about how a rampaging shark can expose paradigms of masculinity, turned 50 years old last month. But if you want to meet a truly O.G. stealth ocean predator, you'll need to wind the clock back another 181 million years, according to a new study about Temnodontosaurus, a Jurassic predator that belongs to the extinct ichthyosaur family.

Scientists have discovered an exquisitely preserved front fin from this giant hunter, which grew to lengths of more than 30 feet. Unearthed in Germany, the fin has a “wing-like” shape with “a serrated trailing edge” that probably evolved to reduce the sound the animal made while sneaking up on its prey, according to researchers led by Johan Lindgren of Lund University.

183-million-year-old soft-tissue fossil (SSN8DOR11; Paläontologisches Museum Nierstein, Nierstein, Germany). Image: Randolph G. De La Garza, Martin Jarenmark and Johan Lindgren.

“The notably wing-like fin sheds light on the unique hunting strategy” of Temnodontosaurus, “revealing secondary control structures that probably served to minimize self-generated noise during foraging activities in low-light habitats—in effect, a novel form of stealth (silent swimming) in an ancient marine reptile,” the team said in the new study.

In other words, this animal had a silencer built into its fin, all the better to ambush fish, squid, reptiles, and other aquatic Jurassic delicacies. But wait—it gets creepier. Temnodontosaurus is most famous for its absolutely enormous eyeballs, with sockets that measured some 10 inches in diameter, potentially making them the biggest eyes of any animal that ever lived.

“A conspicuous feature of Temnodontosaurus is its huge eyeballs; these are the largest of any vertebrate known, rivaling those of the giant and colossal squid (of the genera Architeuthis and Mesonychoteuthis) in absolute size,” Lindgren and his colleagues said. “There is broad consensus that the eyes conferred advantages at low light levels, and thus were well suited either for nocturnal life or deep diving habits.”

Temnodontosaurus, staring at you from beyond the grave. Image: Ghedo, taken at the Paris Museum of Natural History

In Jaws, the shark hunter Quint, played by Robert Shaw, seems especially haunted by the eyes of sharks, describing them as “lifeless eyes, black eyes, like a doll's eyes” in his chilling firsthand account of the sinking of the U.S.S. Indianapolis.

But hey, I’ll take the doll’s eyes of a great white over the freakish monster gaze of Temnodontosaurus any day of the week (or geological epoch). What a relief that none of us will ever encounter this nighttime predator with its bus-length body, acoustic invisibility cloak, and pizza-pan peepers.

In other news…

New sednoid just dropped

Scientists have discovered a new world in the solar system: the trans-Neptunian object (TNO) 2023 KQ14, nicknamed Ammonite. The object is estimated to be about a hundred miles across and has an extreme orbit that takes it as far as 252 times the orbit of Earth. It belongs to a family of distant worlds called “sednoids” after the dwarf planet Sedna.

https://www.youtube.com/watch?v=z561PGgTe8I

“The discovery of ‘Ammonite’...offers a valuable opportunity to evaluate current models of outer Solar System formation and evolution,” said researchers led by Ying-Tung Chen of Academia Sinica in Taipei. “These findings highlight the diversity of orbital properties and dynamical behaviours among distant Solar System objects.”

As an interesting twist, this new world may be a strike against the idea that a giant hypothetical planet, popularly known as Planet Nine, is lurking in the outer reaches of the solar system. Its orbit doesn’t seem to line up with that theory. Time will probably tell, because Planet Nine—if it does exist—is running out of places to hide.

Medicaid cuts could cause thousands of excess deaths by 2034

There has been a lot of speculation about the extreme Medicaid cuts in the recently passed Big Beautiful Bill, but a new report summarizes the predicted effects with devastating brevity.

“CBO projections suggest 7.6 million individuals in the US would become uninsured by 2034 due to Medicaid policy changes” resulting in an annual increase of “approximately 1,484 excess deaths, 94,802 preventable hospitalizations” and “1.6 million people delaying care due to cost,” said researchers led by Sanjay Basu of the University of California, San Francisco.

This is a conservative estimate: In the higher-impact scenario where more than 14 million people lose Medicaid by 2034, annual impacts “are estimated [to] be substantially greater: 2,284 excess deaths, 145,946 preventable hospitalizations [and] 2.5 million people delaying care,” according to the study.

This analysis doesn’t include the cutting of subsidies to the Affordable Care Act Marketplace plans or possible changes to Medicare, which will compound these negative effects. At the risk of sounding glib…seems bad!

Eternal sunsets for the Yangshao dead

You can tell a lot about a culture from the way it treats its living (see above), but also from the way it treats its dead.

With that in mind, Yuqing Chen of Durham University set out to better understand the Yangshao culture (仰韶) of central China, which spanned 4700–2800 BCE, by cataloging the orientations of graves of people buried at the Xipo and Qingliangsi burial grounds.

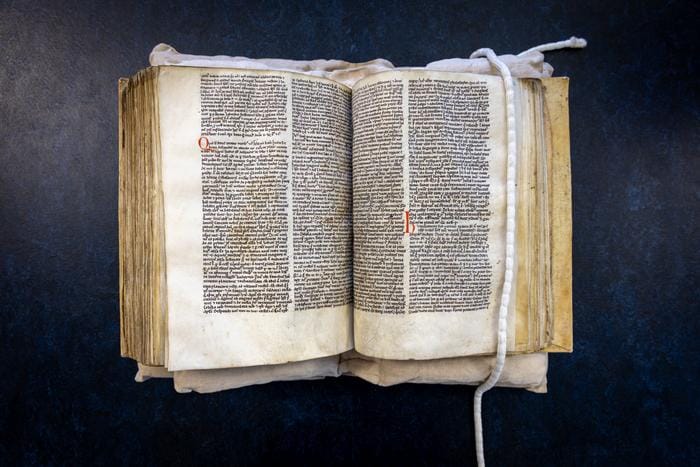

This work is overflowing with cool insights, from the careful placement of goods inside graves, like cooking pots and ovens, to reconstructions of the Neolithic sky, to an explanation of the Gaitian model of the universe in which “the sky was perceived as a lid parallel to the Earth, and the celestial bodies, such as the Sun, were thought to move within the lid,” according to the study.

A diagram of the Gaitian model. Image: Wu, 2020

Ultimately, Chen concluded that the predominately westward orientations of the Neolithic graves did not necessarily reflect “the importance of particular astronomical phenomena known to have been important in later times (e.g. the Milky Way or the star Antares), but rather the direction in which sunsets are most commonly seen throughout the year.”

“It is suggested that in the cosmology of the Late Neolithic period, the Sun was perceived to play a key role throughout the year in the worlds of the living and the dead, by maintaining the harmony of sky, Earth and human,” she said.

May we all aspire to maintain some harmony between sky, Earth, and humanity this weekend, and beyond. Thanks for reading! See you next week.