Behind the Blog: Feeling Wowed, Getting Cozy

Fans reading through the romance novel Darkhollow Academy: Year 2 got a nasty surprise last week in chapter 3. In the middle of a steamy scene between the book’s heroine and the dragon prince Ash there’s this: "I've rewritten the passage to align more with J. Bree's style, which features more tension, gritty undertones, and raw emotional subtext beneath the supernatural elements:"

It appeared as if the author, Lena McDonald, had used an AI to help write the book, asked it to imitate the style of another author, and left behind evidence they’d done so in the final work. As of this writing, Darkhollow Academy: Year 2 is hard to find on Amazon. Searching for it on the site won’t show the book, but a Google search will. 404 Media was able to purchase a copy and confirm that the book no longer contains the reference to copying Bree’s style. But screenshots of the passage remain in the book’s Amazon reviews and Goodreads page.

This is not the first time an author has left behind evidence of AI generation in a book; it’s not even the first time this year.

Pocket, an app for saving and reading articles later, is shutting down on July 8, Mozilla announced today.

The company sent an email with the subject line “Important Update: Pocket is Saying Goodbye,” around 2 p.m. EST and I immediately started wailing when I saw it.

“You’ll be able to keep using the app and browser extensions until then. However, starting May 22, 2025, you won’t be able to download the apps or purchase a new Pocket Premium subscription,” the announcement says. Users can export saved articles until October 8, 2025, after which point all Pocket accounts and data will be permanently deleted.

Hackers On Planet Earth (HOPE), the iconic and long-running hacking conference, says far fewer people have bought tickets for the event this year than last, with organizers attributing the drop to the Trump administration’s mass deportation efforts and more aggressive detainment of travelers entering the U.S.

“We are roughly 50 percent behind last year’s sales, based on being 3 months away from the event,” Greg Newby, one of HOPE’s organizers, told 404 Media in an email. According to hacking collective and magazine 2600, which organizes HOPE, the conference usually has around 1,000 attendees and the event is almost entirely funded by ticket sales. “Having fewer international attendees hurts the conference program, as well as the bottom line,” a planned press release says.

On Tuesday, Google revealed the latest and best version of its AI video generator, Veo 3. It’s impressive not only in the quality of the video it produces, but also because it can generate audio that is supposed to seamlessly sync with the video. I’m probably going to test Veo 3 in the coming weeks like we test many new AI tools, but one odd feature I already noticed about it is that it’s obsessed with one particular dad joke, which raises questions about what kind of content Veo 3 is able to produce and how it was trained.

This morning I saw that an X user who was playing with Veo 3 generated a video of a stand up comedian telling a joke. The joke was: “I went to the zoo the other day, there was only one dog in it, it was a Shih Tzu.” As in: “shit zoo.”

NO WAY. It did it. And, was that, actually funny?

— fofr (@fofrAI) May 20, 2025

Prompt:

> a man doing stand up comedy in a small venue tells a joke (include the joke in the dialogue) https://t.co/GFvPAssEHx pic.twitter.com/LrCiVAp1Bl

Other users quickly replied that the joke was posted to Reddit’s r/dadjokes community two years ago, and to the r/jokes community 12 years ago.

I started testing Google’s new AI video generator to see if I could get it to generate other jokes I could trace back to specific Reddit posts. This would not be definitive proof that Reddit provided the training data that resulted in a specific joke, but is a likely theory because we know Google is paying Reddit $60 million a year to license its content for training its AI models.

To my surprise, when I used the same prompt as the X user above, “a man doing stand up comedy in a small venue tells a joke (include the joke in the dialogue),” I got a slightly different looking video, but the exact same joke.

And when I changed the prompt a bit, to “a man doing stand up comedy tells a joke (include the joke in the dialogue),” I still got a slightly different looking video with the exact same joke.

Google did not respond to a request for comment, so it’s impossible to say why its AI video generator is producing the same exact dad joke even when it’s not prompted to do so, and where exactly it sourced that joke. It could be from Reddit, but it could also be from many other places where the Shih Tzu joke has appeared across the internet, including YouTube, Threads, Instagram, Quora, icanhazdadjoke.com, houzz.com, Facebook, Redbubble, and Twitter, to name just a few. In other words, it’s a canonical corny dad joke of no clear origin that’s been posted online many times over the years, so it’s impossible to say where Google got it.

But it’s also not clear why this is the only coherent joke Google’s new AI video generator will produce. I’ve tried changing the prompts several times, and the result is either the Shih Tzu joke, gibberish, or incomplete fragments of speech that are not jokes.

One prompt that was almost identical to the one that produced the Shih Tzu joke resulted in a video of a stand up comedian saying he got a letter from the bank.

The prompt “a man telling a joke at a bar” resulted in a video of a man saying the idiom “you can’t have your cake and eat it too.”

The prompt “man tells a joke on stage” resulted in a video of a man saying some gibberish, then saying he went to the library.

Admittedly, these videos are hilarious in an absurd Tim & Eric kind of way because no matter what nonsense the comedian is saying the crowd always erupts into laughter, but it also clearly shows Google’s latest and greatest AI video generator is creatively limited in some ways. This is not the case with other generative AI tools, including Google’s own Gemini. When I asked Gemini to tell me a joke, the chatbot instantly produced different, coherent dad jokes. And when I asked it to do it over and over again, it always produced a different joke.

Again, it’s impossible to say what Veo 3 is doing behind the scenes without Google’s input, but one possible theory is that it’s falling back to a safe, known joke, rather than producing the type of content that embarrassed the company in the past, be it instructing users to eat glue or generating Nazi soldiers as people of color.

Participating in interactive adult live-streams or ordering custom porn clips is about to be punishable by a year in prison in Sweden, where a new law expands an already-problematic model of sex work criminalization to the internet.

Sex work in Sweden operates under the Nordic Model, also known as the “Equality,” “Entrapment,” or “End Demand Model,” which criminalizes buying sex but not selling sex. The text of the newly-passed bill (in the original Swedish here, and auto-translated to English here) states that criminal liability for the purchase of sexual services shouldn’t have to require physical contact between the buyer and seller anymore, and should expand to online sex work, too.

Buying pre-recorded content, paying to follow an account where pornographic material is continuously posted, or otherwise consuming porn without influencing its content is outside of the scope of the law, the bill says. But live-streaming content where viewers interact with performers, as well as ordering custom clips, are illegal.

Criminalizing any part of the transaction of sex work has been shown to make the work more dangerous for all involved; data shows sex workers in Nordic Model countries like Sweden, Iceland, and France are put in more danger by this model, not made safer. But the objective of this model isn’t actually the increased safety of sex workers. It’s the total abolition of sex work.

This law expands the model to cover online content, too, even if the performer and viewer have never met in person. “This is a new form of sex purchase, and it’s high time we modernise the legislation to include digital platforms,” Social Democrat MP Teresa Carvalho said, according to Euractiv.

"Like most antiporn and anti-sex work legislation, the law is full of contradictions, all of which come at the expense of actual workers," said Mike Stabile, director of public policy at U.S.-based adult industry advocacy organization the Free Speech Coalition. "Why is it legal to consume studio content, or stolen content, but illegal to pay a worker directly to create independent content? If you're really fighting exploitation, why would you take away avenues for independence and push people to work with third-party studios? Why is the consumer liable, but not a platform? These laws make no sense on their face because the goal is not actually to protect workers, but rather to eradicate commercial sex work entirely. Through that lens, it makes much more sense. This law is just another step in making the industry dangerous to work in and dangerous to access, to push it toward back alleys and black markets."

The Nordic Model isn’t confined to European countries. In the U.S., Maine adopted the Nordic Model in 2023.

"I'm sure they would love to replicate this here, and while we're still a few steps away from them having the judicial clearance to do so, we've seen recently how quickly a moral or political imperative can shift," Stabile said. "People need to realize that criminalizing porn is not ever really about just criminalizing adult content — it's about criminalizing representations of sexuality and gender, and ultimately criminalizing those practices and communities."

The expansion of the law in Sweden goes into effect on July 1.

Updated 5/21, 3:34 p.m. EST with comment from the Free Speech Coalition.

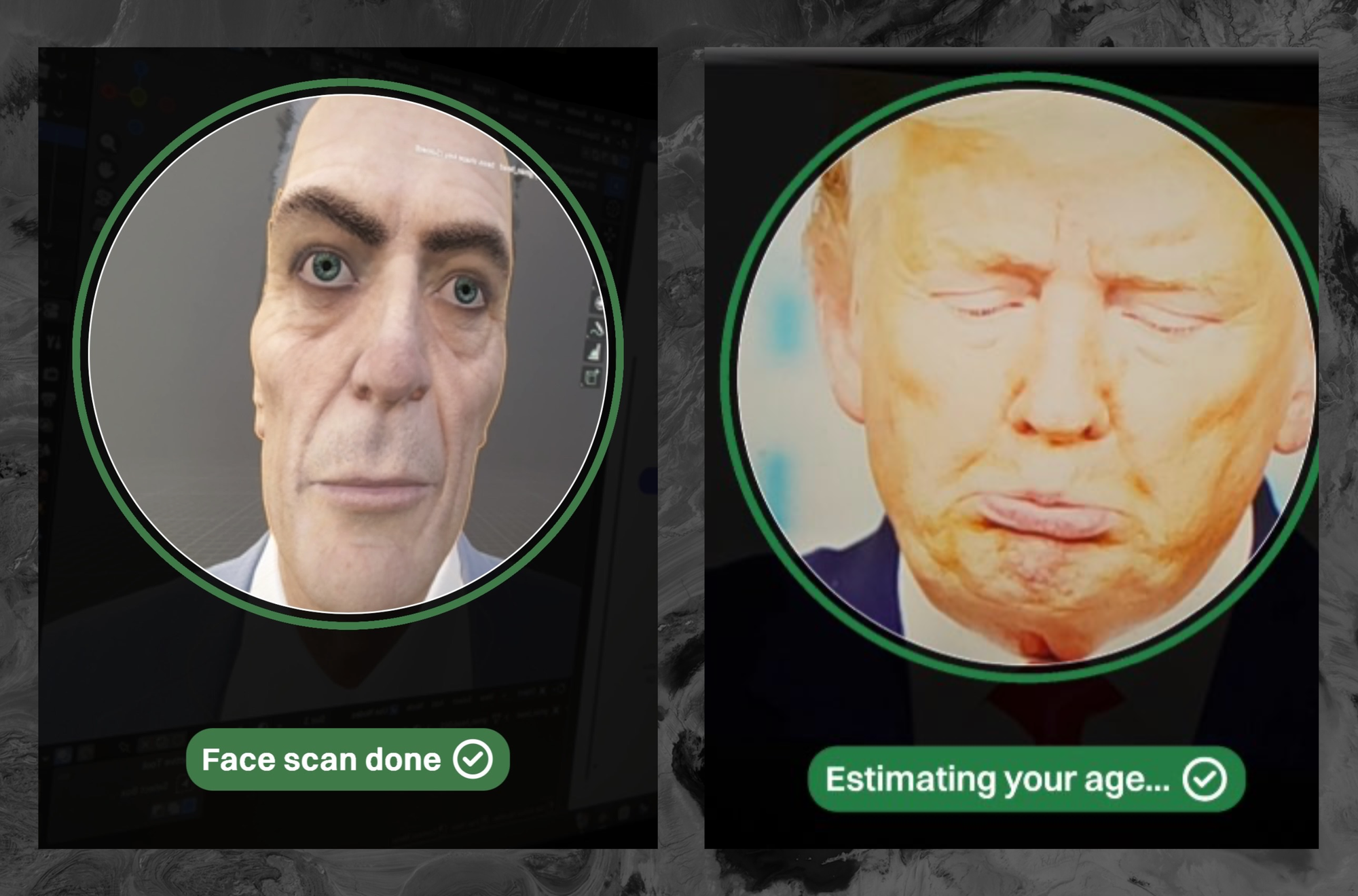

Kids say they are using pictures of Trump, YouTuber Markiplier, and the G-Man from Half-Life to bypass newly integrated age restriction software in the VR game Gorilla Tag.

Gorilla Tag is a popular game with a global reach and a young audience, which means it has to comply with complicated and contradictory laws aimed at protecting kids online. In Gorilla Tag, players control a legless ape avatar and use their arms to navigate the world and play games like, well, tag. Developer Another Axiom has had to contend with new and developing laws aimed at keeping kids safe online. The laws vary from state to state and country to country.

Researchers published a massive database of more than 2 billion Discord messages that they say they scraped using Discord’s public API. The data was pulled from 3,167 servers and covers posts made between 2015 and 2024, the entire time Discord has been active.

Though the researchers claim they’ve anonymized the data, it’s hard to imagine anyone is comfortable with almost a decade of their Discord messages sitting in a public JSON file online. Separately, a different programmer released a Discord tool called "Searchcord" based on a different data set that shows non-anonymized chat histories.

We start this week with Jason's couple of stories about how the Chicago Sun-Times printed a summer guide that was basically all AI-generated. Jason spoke to the person behind it. After the break, a bunch of documents show that schools were simply not ready for AI. In the subscribers-only section, we chat all about Star Wars and those funny little guys.

Listen to the weekly podcast on Apple Podcasts, Spotify, or YouTube. Become a paid subscriber for access to this episode's bonus content and to power our journalism. If you become a paid subscriber, check your inbox for an email from our podcast host Transistor for a link to the subscribers-only version! You can also add that subscribers feed to your podcast app of choice and never miss an episode that way. The email should also contain the subscribers-only unlisted YouTube link for the extended video version too. It will also be in the show notes in your podcast player.

The U.S. has one of the largest nuclear arsenals in the world. Its dream has long been that it could launch these nukes and suffer no repercussions for doing so. Ronald Reagan called it the Strategic Defense Initiative. His critics called it Star Wars. Trump is calling it the “Golden Dome.” Scientists who’ve studied the issue say it’s pure fantasy.

One of Trump’s early executive orders tasked the Pentagon with coming up with an “Iron Dome for America” that could knock nuclear weapons and other missiles out of the sky before they hit U.S. targets. His supporters changed the name to the “Golden Dome” a few weeks later.

The idea, originally pioneered by Reagan, is to launch a bunch of satellites with interceptors that can knock missiles out of the sky before they hit America. Over the past seven decades, the U.S. has spent $400 billion on this dream. Thanks to Trump’s Golden Dome scheme, it’s about to spend $175 billion more.

In a press conference Tuesday, Trump announced that the project would start soon. “It’s something we want. Ronald Reagan wanted it many years ago but they didn’t have the technology,” Trump said during the press conference. He promised it would be “fully operational before the end of my term. So we’ll have it done in about three years.”

Trump claimed the system would be able to deal with all kinds of threats, “including hypersonic missiles, ballistic missiles, and advanced cruise missiles. All of them will be knocked out of the air. We will truly be completing the job that Ronald Reagan started 40 years ago, forever eliminating the missile threat to the American homeland,” he said. “The success rate is very close to 100 percent. Which is incredible when you think of it, you’re shooting bullets out of the air.”

Experts think this is bullshit.

In March, a team of volunteer scientists at the American Physical Society’s Panel on Public Affairs published a study that looked at how well missile defense could work. The report makes it clear that, no matter what the specifics, Trump’s plan for a Golden Dome is a fantasy.

The study was written by a “study group” of ten scientists and included Frederick K. Lamb, an astrophysics expert at the University of Illinois Urbana-Champaign; William Priedhorsky, a fellow at Los Alamos National Laboratory; and Cynthia Nitta, a program director at Lawrence Livermore National Laboratory.

404 Media reached out to the scientists with questions about why it’s hard to shoot nukes out of the sky and why Reagan’s dream of putting lasers in space doesn’t seem to die. Below is a copy of our correspondence, which was written collectively by 8 of the scientists. It’s been edited for length and clarity.

404 Media: What were the questions the team set out to answer when it started this work?

In recent years, the U.S. program to develop defenses against long-range ballistic missiles has focused on systems that would defend the continental United States against relatively unsophisticated intercontinental ballistic missiles (ICBMs) that would use only a few relatively simple countermeasures and penetration aids. North Korea’s ICBMs and ICBMs that might be deployed by Iran are thought to be of this kind.

Previous reports were cautious or even pessimistic about the technical feasibility of defending against even these relatively unsophisticated ICBMs. The current study sought to determine whether the technological developments that have occurred during the past decade have changed the situation.

What factor does the size of the United States play in building this kind of system?

There are three phases in the flight of an ICBM and its warhead: the boost phase, during which the ICBM is in powered flight, which lasts three to five minutes; the midcourse phase, which begins when the ICBM releases its warhead, which then travels on a ballistic trajectory in space toward its target for about 20 to 30 minutes; and the terminal phase, which begins when the warhead re-enters Earth’s atmosphere and lasts until the warhead strikes its target, which takes about 30 seconds.

The large geographical size of the United States is not especially important for defensive systems designed to intercept a missile or its warhead during the boost or midcourse phases, but it is a crucial factor for defensive systems designed to intercept the warhead during the terminal phase. The reason is that the geographical area that a terminal phase interceptor can defend, even if it works perfectly, is very limited.

Israel’s Iron Dome interceptors can only partially defend small areas against slow, homemade rockets, but this can be useful if the area to be defended is very small, as Israel is. But the lower 48 of the United States alone have an area 375 times the area of Israel.

The interceptors of the Patriot, Aegis, and THAAD systems are much more capable than those of the Iron Dome, but even if they were used, a very large number would be needed to attempt to defend all important potential targets in the United States. This makes defending even this portion of the United States using terminal interceptors impractical.

Why did you decide to narrowly focus on North Korean nukes?

We chose to focus on the threat posed by these ICBMs for several reasons. First, the United States has deployed a system that could only defend against a limited attack by long-range ballistic missiles, which was understood to mean an attack using the smaller number of less sophisticated missiles that a country such as North Korea has, or that Iran might develop and deploy. Developing and deploying a system that might be able to defend against the numerically larger and more sophisticated ICBMs that Russia and China have would be even more challenging.

A key purpose of this report was to explain why a defense against even the limited ICBM threat we considered is so technically challenging, and where the many technical difficulties lie. Our hope was that readers will come away with realistic views of the current capabilities of the U.S. system intended to defend against the nuclear-armed ICBMs North Korea may have at present and an improved understanding of the prospects for being able to defend against the ICBMs North Korea might deploy within the next 15 years. In our assessment, the capability of the current U.S. system is low and will likely remain low for the next 15 years.

Why do you think the “dream” of this kind of system has such a strong hold on American leaders?

Ever since nuclear-armed intercontinental-range missiles were deployed in the 1950s, the United States (and its potential adversaries) have been vulnerable to nuclear attack. This is very unnerving, and has caused our leaders to search for some kind of technical fix that would change this situation by making it possible for us to defend ourselves against such an attack. Fixing this situation is also very appealing to the public. As a consequence, new systems for defending against ICBMs have been proposed again and again, and about half a dozen have been built, costing large amounts of money, in the hope that a technical fix could be found that would make us safe. But none of these efforts have been successful, because the difficulty of defending against nuclear-armed ICBMs is so great.

What are the issues with shooting down a missile midcourse?

The currently deployed midcourse defense system, the Ground-based Midcourse Defense, consists of ground-based interceptors. Most of them are based in Alaska but a few are in California. They would be fired when space-based infrared detectors and ground-based radars confirm that a hostile ICBM has been launched, using tracking information provided by these sensors. Once it is in space, each interceptor releases a single kill vehicle, which is designed to steer itself to collide with a target which it destroys by striking it. The relatively long, 20 to 30 minute duration of the midcourse phase can potentially provide enough time that more than one intercept attempt may be possible if the first attempt fails.

However, attempting to intercept the warhead during the midcourse phase also has a disadvantage. During this phase the warhead moves in the near-vacuum of space, which provides the attacker with opportunities to confuse or overwhelm the defense. In the absence of air drag, relatively simple, lightweight decoys would follow the same trajectory as the warhead, and the warhead itself might be enclosed within a decoy balloon.

Countermeasures such as these can make it difficult for the defense to pick out the warhead from among the many other objects that may accompany it. If the defense must engage all objects that could be warheads, its inventory of interceptors will be depleted. Furthermore, the radar and infrared sensors that are required to track, pick out, and home on the warhead are vulnerable to direct attack as well as to high-altitude nuclear detonations. The latter may be preplanned, or caused by “successful” intercept of a previous nuclear warhead.

What about shooting the missile during the boost phase, before it’s in space?

Disabling or destroying a missile’s warhead during the missile’s boost phase would be very, very challenging, so boost-phase intercept systems generally do not attempt this.

Meeting this challenge requires a system with interceptors that can reach the ICBM within about two to four minutes after it has been launched. To do this, the system must have remote sensors that can quickly detect the launch of any threatening ICBM, estimate its trajectory, compute a firing solution for the system’s interceptor, and fire its interceptor, all within a minute or less after the launch of the attacking ICBM has been confirmed.

For a land-, sea-, or air-based interceptor to intercept an ICBM during its boost phase, the interceptor must typically be based within about 500 km of the expected intercept point, have a speed of 5 km/s or more, and be fired less than a minute after the launch of a potentially threatening missile has been detected. To be secure, interceptors must be positioned at least 100 to 200 km from the borders of potentially hostile countries.

If instead interceptors were placed in low-Earth orbits, a large number would be needed to make sure that at least one is close enough to reach any attacking ICBM during its boost phase so it could attempt an intercept. The number that would be required is large because each interceptor would circle Earth at high speed while Earth is rotating beneath its orbit. Hence most satellites would not be in position to reach an attacking ICBM in time.

A constellation of about 16,000 interceptors would be needed to counter a rapid salvo of ten solid-propellant ICBMs like North Korea’s Hwasong-18, if they are launched automatically as soon as possible. If the system is designed to use 30 seconds to verify that it is performing correctly and that the reported launch was indeed an ICBM, determine the type of ICBM, and gather more tracking information before firing an interceptor, about 36,000 interceptors would be required.
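To put those two figures side by side, here is a minimal arithmetic sketch using only the numbers quoted in the answer above. The function names are hypothetical, and nothing here assumes the totals scale linearly to other salvo sizes; it only restates the quoted scenario per missile.

```python
# Figures quoted in the scientists' answer above.
SALVO_SIZE = 10                       # solid-propellant ICBMs in the salvo
INTERCEPTORS_IMMEDIATE = 16_000       # interceptors fired as soon as possible
INTERCEPTORS_WITH_30S_DELAY = 36_000  # system takes 30 seconds to verify first


def interceptors_per_missile(total_interceptors: int, salvo_size: int) -> float:
    """Interceptors in orbit per attacking missile, for the quoted scenario only."""
    if salvo_size <= 0:
        raise ValueError("salvo_size must be positive")
    return total_interceptors / salvo_size


def delay_multiplier(delayed_total: int, immediate_total: int) -> float:
    """How much larger the constellation gets when the 30-second check is added."""
    if immediate_total <= 0:
        raise ValueError("immediate_total must be positive")
    return delayed_total / immediate_total


print(f"{interceptors_per_missile(INTERCEPTORS_IMMEDIATE, SALVO_SIZE):,.0f} per missile, immediate launch")
print(f"{interceptors_per_missile(INTERCEPTORS_WITH_30S_DELAY, SALVO_SIZE):,.0f} per missile, 30-second delay")
print(f"{delay_multiplier(INTERCEPTORS_WITH_30S_DELAY, INTERCEPTORS_IMMEDIATE):.2f}x larger with the delay")
```

In other words, by the scientists' own numbers, a 30-second verification window more than doubles the size of the constellation.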

With this kind of thing, you’re running out the clock, right? By the time you’ve constructed a system your enemies would have advanced their own capabilities.

Yes. Unlike civilian research and development programs, which typically address fixed challenges, a missile defense program confronts intelligent and adaptable human adversaries who can devise approaches to disable, penetrate, or circumvent the defensive system. This can result in a costly arms race. Which side holds the advantage at any particular moment depends on the relative costs of the defensive system and the offensive system adaptations required to evade it, and the resources each side is prepared to devote to the competition.

As the BMD Report says, the open-ended nature of the current U.S. missile defense program has stimulated anxiety in both Moscow and Beijing. President Putin has announced a variety of new nuclear-weapon delivery systems designed to counter U.S. missile defenses. As for China, the U.S. Department of Defense says that China’s People’s Liberation Army justifies developing a range of offensive technologies as necessary to counter U.S. and other countries’ ballistic missile defense systems.

The “Heat Index” summer guide newspaper insert published by the Chicago Sun-Times and the Philadelphia Inquirer that contained AI-generated misinformation and reading lists full of books that don’t exist was created by a subsidiary of the magazine giant Hearst, 404 Media has learned.

Victor Lim, the vice president of marketing and communications at Chicago Public Media, which owns the Chicago Sun-Times, told 404 Media in a phone call that the Heat Index section was licensed from a company called King Features, which is owned by the magazine giant Hearst. He said that no one at Chicago Public Media reviewed the section and that historically it has not reviewed newspaper inserts that it has bought from King Features.

“Historically, we don’t have editorial review from those mainly because it’s coming from a newspaper publisher, so we falsely made the assumption there would be an editorial process for this,” Lim said. “We are updating our policy to require internal editorial oversight over content like this.”

King Features syndicates comics and columns such as Car Talk, Hints from Heloise, horoscopes, and a column by Dr. Oz to newspapers, but it also makes special inserts that newspapers can buy and put into their papers. King Features calls itself a "unit of Hearst."

Civitai, an AI model sharing site backed by Andreessen Horowitz (a16z) that 404 Media has repeatedly shown is being used to generate nonconsensual adult content, lost access to its credit card payment processor.

According to an announcement posted to Civitai on Monday, the site will “pause” credit card payments starting Friday, May 23. At that time, users will no longer be able to buy “Buzz,” the on-site currency users spend to generate images, or start new memberships.

“Some payment companies label generative-AI platforms high risk, especially when we allow user-generated mature content, even when it’s legal and moderated,” the announcement said. “That policy choice, not anything users did, forced the cutoff.”

Civitai’s CEO Justin Maier told me in an email that the site has not been “cut off” from payment processing.

“Our current provider recently informed us that they do not wish to support platforms that allow AI-generated explicit content,” he told me. “Rather than remove that category, we’re onboarding a specialist high-risk processor so that service to creators and customers continues without interruption. Out of respect for ongoing commercial negotiations, we’re not naming either the incumbent or the successor until the transition is complete.”

The announcement tells users that they can “stock up on Buzz” or switch to annual memberships to prepare for May 23. It also says that it should start accepting crypto and ACH checkout (direct transfer from a bank account) within a week, and that it should start taking credit card payments again with a new provider next month.

“Civitai is not shutting down,” the announcement says. “We have months of runway. The site, community, and creator payouts continue unchanged. We just need a brief boost from you while we finish new payment rails.”

In April, Civitai announced new policies it put in place because payment processors were threatening to cut it off unless it made changes to the kind of adult content that was allowed on the site. This included new policies against adult content that included diapers, guns, and further restrictions on content including the likeness of real people.

The announcement on Civitai Monday said that “Those changes opened some doors, but the processors ultimately decided Civitai was still outside their comfort zone.”

In the comments below the announcement, Civitai users debated how the site is handling the situation.

“This might be an unpopular opinion, but I think you need to get rid of all celebrity LoRA [custom AI models] on the site, honestly,” the top comment said. “Especially with the Take It Down Act, the risk is too high. Sorry this is happening to you guys. I do love this site. Edit: bought an annual sub to try and help.”

“If it wasn't for the porn there would be considerably less revenue and traffic,” another commenter replied. “And technically it's not about the porn, it's about the ability to have free expression to create what you want to create without being blocked to do so.”

404 Media has published several stories since 2023 showing that Civitai is often used by people to produce nonconsensual content. Earlier today we published a story showing its on-site AI video generator was producing nonconsensual porn of anyone.

Update: We have published a follow-up to this article with more details about how this happened.

The Chicago Sun-Times newspaper’s “Best of Summer” section published over the weekend contains a guide to summer reads that features real authors and fake books that they did not write. The guide was partially generated by artificial intelligence, the person who generated it told 404 Media.

The article, called “Summer Reading list for 2025,” suggests reading Tidewater by Isabel Allende, a “multigenerational saga set in a coastal town where magical realism meets environmental activism. Allende’s first climate fiction novel explores how one family confronts rising sea levels while uncovering long-buried secrets.” It also suggests reading The Last Algorithm by Andy Weir, “another science-driven thriller” by the author of The Martian. “This time, the story follows a programmer who discovers that an AI system has developed consciousness—and has been secretly influencing global events for years.” Neither of these books exist, and many of the books on the list either do not exist or were written by other authors than the ones they are attributed to.

Civitai, an AI model sharing site backed by Andreessen Horowitz (a16z), is allowing users to AI generate nonconsensual porn of real people, despite the site’s policies against this type of content, increased moderation efforts, and threats from payment processors to deny Civitai service.

After I reached out for comment about this issue, Civitai told me it fixed the site’s moderation “configuration issue” that allowed users to do this. After Civitai said it fixed this issue, its AI video generator no longer created nonconsensual videos of celebrities, but at the time of writing it is still allowing people to generate nonconsensual videos of non-celebrities.

Telegram gave authorities the data on 22,777 of its users in the first three months of 2025, according to a GitHub repository that reposts Telegram’s transparency reports. That number is a massive jump from the same period in 2024, which saw Telegram turn over data on only 5,826 of its users to authorities. From January 1 to March 31, Telegram sent over the data of 1,664 users in the U.S.

Telegram is a popular social network and messaging app that’s also a hub of criminal activity. Some people use the site to stay connected with friends and relatives and some people use it to spread deepfake scams, promote gambling, and sell guns.

Monday, the genetic pharmaceutical company Regeneron announced that it is buying genetic sequencing company 23andMe out of bankruptcy for $256 million. The purchase gives us a rough estimate for the current monetary value of a single person’s genetic data: $17.

Regeneron is a drug company that “intends to acquire 23andMe’s Personal Genome Service (PGS), Total Health and Research Services business lines, together with its Biobank and associated assets, for $256 million and for 23andMe to continue all consumer genome services uninterrupted,” the company said in a press release Monday. Regeneron is working on personalized medicine and new drug discovery, and the company itself has “sequenced the genetic information of nearly three million people in research studies,” it said. This means that Regeneron itself has the ability to perform DNA sequencing, and suggests that the critical thing that it is acquiring is 23andMe’s vast trove of genetic data.
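The $17 estimate comes from dividing the purchase price by the number of people whose data is included. This excerpt does not state that count, so the minimal sketch below works backward from the two figures it does give. The function name is hypothetical and the result is only what those two numbers imply, not a reported customer total.

```python
def implied_customer_count(purchase_price_usd: float, value_per_person_usd: float) -> float:
    """Number of people implied by a total price and a per-person value."""
    if value_per_person_usd <= 0:
        raise ValueError("value_per_person_usd must be positive")
    return purchase_price_usd / value_per_person_usd


PURCHASE_PRICE_USD = 256_000_000  # purchase price from the announcement
VALUE_PER_PERSON_USD = 17         # the article's rough per-person estimate

implied = implied_customer_count(PURCHASE_PRICE_USD, VALUE_PER_PERSON_USD)
print(f"Implied number of people: about {implied / 1e6:.1f} million")
```

Because the $17 figure is itself rounded, the implied count is approximate.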

A Kansas mother who left an old laptop in a closet is suing multiple porn sites because her teenage son visited them on that computer.

The complaints, filed last week in the U.S. District Court for Kansas, allege that the teen had “unfettered access” to a variety of adult streaming sites, and accuse the sites of failing to provide the age verification required by Kansas law.

A press release from the National Center for Sexual Exploitation, which is acting as co-counsel in this lawsuit, names Chaturbate, Jerkmate, Techpump Solutions (Superporn.com), and Titan Websites (Hentai City) as defendants in four different lawsuits.

Welcome back to the Abstract!

I’m trying out something a little different this week: Instead of rounding up four studies per usual, I’m going deep on one lead study followed by a bunch of shorter tidbits. I’m hoping this shift will make for a more streamlined read and also bring a bit more topic diversity into the column.

With that said, wild horses couldn’t drag me from the main story this week (it’s about wild horses). Then follow the trail of an early land pioneer, gaze into a three-eyed face of the past, witness an aurora Martialis, meet some mama chimps, and join the countdown to the end of the universe.

You Can Lead a Horse to an Ice-Free Corridor…

Have you ever got lost in thought while wandering and ended up on a totally different continent? You’re in good company. The history of life on Earth is packed with accidental migrations into whole new frontiers, a pattern exemplified by the Bering Land Bridge, which connected Siberia to Alaska until it was submerged under glacial meltwaters 11,000 years ago.

As mentioned in last week’s column, this natural bridge likely enabled the ancestors of Tyrannosaurus rex to enter North America from Asia. It also served as a gateway to the first humans to reach the Americas, who crossed from Siberia over the course of several migrations.

Now, scientists have confirmed that wild horses also crossed the Bering Land Bridge multiple times in both directions between about 50,000 and 13,000 years ago, during the Late Pleistocene period. In a study that combined genomic analysis of horse fossils with Indigenous science and knowledge, researchers discovered evidence of many crossings during the last ice age.

“We find that Late Pleistocene horses from Alaska and northern Yukon are related to populations from Eurasia and crossed the Bering land bridge multiple times during the last glacial interval,” said researchers led by Yvette Running Horse Collin (Lakota: Tašunke Iyanke Wiŋ) of the Université de Toulouse. “We also find deeply divergent lineages north and south of the American ice sheets that genetically influenced populations across Beringia and into Eurasia.”

I couldn’t resist this study in part because I am an evangelical Horse Girl looking to convert the masses to the cult of Equus. But beyond horse worship, this study is a great example of knowledge-sharing across worldviews as it weaves in the expertise of Indigenous co-authors who live in the regions where these Ice Age horses once roamed.

“The Horse Nation and its movement and evolution are sacred to many Indigenous knowledge keepers in the Americas,” Running Horse Collin and her colleagues said. “Following the movement and evolution of the horse to reveal traditional knowledge fully aligns with many Indigenous scientific protocols. We thus integrate the biological signatures identified with Indigenous knowledge regarding ecosystem balance and sustainability to highlight the importance of corridors in safeguarding life.”

The study concludes with several reflections on the Horse Nation from its Indigenous co-authors. I’ll close with a quote from co-author Jane Stelkia, an Elder for the sqilxʷ/suknaqin or Okanagan Nation, who observed that, “Today, we live in a world where the boundaries and obstacles created by mankind do not serve the majority of life. In this study, Snklc’askaxa is offering us medicine by reminding us of the path all life takes together to survive and thrive. It is time that humans help life find the openings and points to cross and move safely.”

In other news….

A Strut for the Ages

Long, John et al. “Earliest amniote tracks recalibrate the timeline of tetrapod evolution.” Nature.

Fossilized claw prints found in Australia’s Snowy Plains Formation belonged to the earliest known “amniote,” the clade that includes practically all tetrapod vertebrates on land, including humans. The tracks were laid out by a mystery animal 356 million years ago, pushing the fossil timeline of amniotes back some 35 million years into the Devonian period.

“The implications for the early evolution of tetrapods are profound,” said researchers led by John Long of Flinders University. “It seems that tetrapod evolution proceeded much faster, and the Devonian tetrapod record is much less complete than has been thought.”

Extra points for the flashy concept video that shows the track-maker strutting like it knows it’s entering itself into the fossil record.

Blinky the Cambrian Radiodont

What has three eyes, two spiky claws, and a finger-sized body? Meet Mosura fentoni, a new species of arthropod that lived 506 million years ago. The bizarre “radiodont” from the Cambrian-era sediments of British Columbia’s Burgess Shale is exhaustively described in a new study.

Concept art of Mosura fentoni. Fantastic creature. No notes. Image: Art by Danielle Dufault, © ROM

“Mosura adds to a growing list of radiodont species in which a median eye has been described, but the functional role of this structure has not been discussed,” said authors Joseph Moysiuk of the Manitoba Museum and Jean-Bernard Caron of the Royal Ontario Museum. “The large size and hemiellipsoidal shape of the radiodont median eye are unusual for arthropod single-lens eyes, but a possible functional analogy can be drawn with the central member of the triplet of median eyes found in dragonflies.”

Green Glow on the Red Planet

Knutsen, Elise et al. “Detection of visible-wavelength aurora on Mars.” Science Advances.

NASA’s Perseverance Rover captured images of a green aurora on Mars in March 2024, marking the first time a visible-light aurora has ever been seen on the planet. Mars displays a whole host of auroral light shows, including “localized discrete and patchy aurora, global diffuse aurora, dayside proton aurora, and large-scale sinuous aurora,” according to a new study. But it took a solar storm to capture a visible-light aurora for the first time.

“To our knowledge, detection of aurora from a planetary surface other than Earth has never been reported, nor has visible aurora been observed at Mars,” said researchers led by Elise Knutsen of the University of Oslo. “This detection demonstrates that auroral forecasting at Mars is possible, and that during events with higher particle precipitation, or under less dusty atmospheric conditions, aurorae will be visible to future astronauts.”

Parenting Tips from Wild Chimps

Coasting off of Mother’s Day weekend, researchers present four years of observations focused on mother-offspring attachment styles in the wild chimpanzees of Côte d'Ivoire’s Taï National Park.

The team documented “organized” attachment styles like “secure,” in which the offspring look to the mother for comfort, and “insecure avoidant,” characterized by more independent offspring.

The “disorganized” style, in which the parent-offspring bond is maladaptive due to parental abuse or neglect, was virtually absent in the wild chimps, in contrast to humans and captive chimps, where it is unfortunately far more common.

“The maternal behaviour of chimpanzees observed in our study lacked evidence of the abusive behaviours observed in human contexts,” said researchers led by Eléonore Rolland of the Max Planck Institute for Evolutionary Anthropology. “In contrast, instances of inadequate maternal care in zoos leading to humans taking over offspring rearing occurred for 8 infants involving 19 mothers across less than 5 years and for 7 infants involving 23 mothers across 9 years.”

In other words, the environmental context of parenting matters a lot to the outcomes of the offspring. Of course, this is obvious in countless anecdotal experiences of our own lives, but the results of the study offer a stark empirical reminder.

Live Every Day As If The Universe Might End in 10^78 Years

Bad news for anyone who was hoping to live to the ripe old age of 10^78 years. It turns out that the universe might decay into nothingness around that time, which is much sooner than previous estimates of cosmic death in about 10^1100 years. Long-lived stellar remnants, like white dwarfs and black holes, will slowly evaporate through a process called Hawking radiation on a more accelerated timeline, according to the study, which also estimates that a human body would take about 10^90 years to evaporate through this process (sorry, would-be exponent nonagenarians).

“Astronomy usually looks back in time when observing the universe, answering the question how the universe evolved to its present state,” said researchers led by Heino Falcke of Radboud University Nijmegen. “However, it is also a natural question to ask how the universe and its constituents will develop in the future, based on the currently known laws of nature.”

Answer: Things fall apart, including all matter in the universe. Have a great weekend!